Deferred Procedure Calls (DPCs) are a commonly used feature of Windows. Their uses are many and varied, but they are most often used for what we typically refer to as “ISR completion,” and they are also the underlying mechanism for timers in Windows.

If they’re so commonly used, then why are we bothering to write an entire article on them? Well, what we’ve found is that most people don’t really understand the implementation details of how DPCs work. And, as it turns out, a solid understanding of those details is important when choosing among the options available to you when creating DPCs, and it is also a lifesaver in some debugging scenarios.

Introduction

This article is not meant to be a comprehensive review of why or how DPCs are used. It is assumed that the reader already knows what a DPC is or, even better, has used them in a driver. If you do not fall into this category, information at that level is readily available on MSDN.

In addition, Threaded DPCs, which are a special type of DPC available on Windows Vista and later, will not be covered in any detail.

As a basis of our discussion, let’s briefly review some basic DPC concepts.

A working definition of DPCs is that they are a method by which a driver can request a callback to an arbitrary thread context at IRQL DISPATCH_LEVEL. The DPC object itself is nothing more than a data structure with a LIST_ENTRY, a callback pointer, some context for the callback, and a bit of control data:

typedef struct _KDPC {
    UCHAR Type;
    UCHAR Importance;
    USHORT Number;
    LIST_ENTRY DpcListEntry;
    PKDEFERRED_ROUTINE DeferredRoutine;
    PVOID DeferredContext;
    PVOID SystemArgument1;
    PVOID SystemArgument2;
    __volatile PVOID DpcData;
} KDPC, *PKDPC, *PRKDPC;

You initialize a DPC Object with KeInitializeDpc and queue the DPC Object with KeInsertQueueDpc. Drivers that use DPCs to perform more extensive work than is appropriate for an Interrupt Service Routine typically use the DPC Object that’s embedded in the Device Object, and cause this DPC Object to be queued by calling the function IoRequestDpc (which internally calls KeInsertQueueDpc). Once queued, at some point in the future your DPC routine is invoked from an arbitrary thread context at IRQL DISPATCH_LEVEL.

With that basic info in hand, we can now cover the gory details of both the queuing and delivery mechanisms that are used for DPCs. That will lead us to discussing what options we have for controlling the behavior of DPCs and what impact those options have.

DPC Queuing

As mentioned previously, DPCs are queued (directly or indirectly) via the KeInsertQueueDpc DDI:

NTKERNELAPI
BOOLEAN
KeInsertQueueDpc (
    __inout PRKDPC Dpc,
    __in_opt PVOID SystemArgument1,
    __in_opt PVOID SystemArgument2
    );

DPCs are actually queued to a particular processor, which is accomplished by linking the DPC Object into the DPC List that’s located in the Processor Control Block (PRCB) of the target processor. Determining the processor to which the DPC Object is queued is fairly easy for the O/S. By default, the DPC is queued to the processor from which KeInsertQueueDpc is called (the “current processor”). However, a driver writer can indicate that a given processor be used for a particular DPC object, using the function KeSetTargetProcessorDpc.
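As a sketch of the default-versus-targeted rule just described, the following user-mode C model picks the processor whose DPC List receives a DPC. The tgt_* names and the use of -1 to mean “no target set” are our own assumptions for illustration; this is not necessarily how the kernel records the target inside the KDPC.

```c
#include <assert.h>

/* Hypothetical model of DPC processor targeting (not the WDK API).
   target_cpu < 0 means "never targeted": use the inserting processor. */
typedef struct {
    int target_cpu;
} tgt_dpc;

/* Stand-in for KeSetTargetProcessorDpc: remember the requested processor. */
static void tgt_set_target_processor(tgt_dpc *dpc, int cpu)
{
    assert(cpu >= 0);
    dpc->target_cpu = cpu;
}

/* Pick the processor whose PRCB DPC List the object is linked into. */
static int tgt_queue_cpu(const tgt_dpc *dpc, int current_cpu)
{
    return dpc->target_cpu >= 0 ? dpc->target_cpu : current_cpu;
}
```

With no target set, a DPC inserted from processor 2 lands on processor 2’s list; once targeted at processor 0, it lands on processor 0’s list no matter which processor does the insert.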

Viewing the DPC List on a particular processor is easy using WinDBG. While the DPC List is actually contained within the PRCB, the PRCB is an extension of the Processor Control Region (PCR). By viewing the PCR with the !pcr command we can see any DPCs currently on the queue for that processor:

0: kd> !pcr 0
KPCR for Processor 0 at ffdff000:
    Major 1 Minor 1
    NtTib.ExceptionList: 8054f624
    NtTib.StackBase: 805504f0
    NtTib.StackLimit: 8054d700
    NtTib.SubSystemTib: 00000000
    NtTib.Version: 00000000
    NtTib.UserPointer: 00000000
    NtTib.SelfTib: 00000000
    SelfPcr: ffdff000
    Prcb: ffdff120
    Irql: 00000000
    IRR: 00000000
    IDR: ffffffff
    InterruptMode: 00000000
    IDT: 8003f400
    GDT: 8003f000
    TSS: 80042000
    CurrentThread: 8055ae40
    NextThread: 81bc0a90
    IdleThread: 8055ae40
    DpcQueue: 0x8055b4a0 0x805015ae [Normal] nt!KiTimerExpiration
              0x81b690a4 0xf9806990 [Normal] atapi!IdePortCompletionDpc
              0x818a12cc 0xf96c5ee0 [Normal] NDIS!ndisMDpcX

One aspect of DPCs to note is that once a DPC Object has been queued to a processor, subsequent attempts to queue the same DPC Object are ignored until the DPC Object has been dequeued (by Windows for execution of its callback). This is what the BOOLEAN return value of KeInsertQueueDpc indicates: TRUE means that Windows queued the DPC to the target processor and FALSE means that the DPC Object is already queued to some processor. This makes sense from a programming perspective, as the DPC data structure only has a single LIST_ENTRY field and thus can only appear on a single queue at a time.
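The “queued at most once” rule follows directly from there being a single link field. Here is a minimal single-threaded sketch of it in user-mode C; the sim_* names are hypothetical, and the queued_on pointer is only loosely modeled on the role the DpcData field might play. The real kernel must do this check atomically across processors, which this model ignores.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct sim_dpc_list;

/* Hypothetical DPC object: one link field, so at most one list at a time. */
typedef struct sim_dpc {
    struct sim_dpc *next;            /* stands in for DpcListEntry */
    struct sim_dpc_list *queued_on;  /* non-NULL while on some processor's list */
} sim_dpc;

/* Hypothetical per-processor DPC List (a singly linked FIFO for brevity). */
typedef struct sim_dpc_list {
    sim_dpc *head, *tail;
    unsigned depth;
} sim_dpc_list;

/* Mirrors KeInsertQueueDpc's documented return value:
   true if queued now, false if the object is already queued somewhere. */
static bool sim_insert_queue_dpc(sim_dpc_list *list, sim_dpc *dpc)
{
    if (dpc->queued_on != NULL)
        return false;                /* already queued: request is ignored */
    dpc->next = NULL;
    dpc->queued_on = list;
    if (list->tail != NULL)
        list->tail->next = dpc;
    else
        list->head = dpc;
    list->tail = dpc;
    list->depth++;
    return true;
}

/* Remove the first DPC; afterwards the object may be queued again. */
static sim_dpc *sim_dequeue_dpc(sim_dpc_list *list)
{
    sim_dpc *dpc = list->head;
    if (dpc == NULL)
        return NULL;
    list->head = dpc->next;
    if (list->head == NULL)
        list->tail = NULL;
    list->depth--;
    dpc->next = NULL;
    dpc->queued_on = NULL;
    return dpc;
}
```

A second insert of the same object, to the same or a different processor’s list, returns false until the object has been dequeued.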

What About Priority?

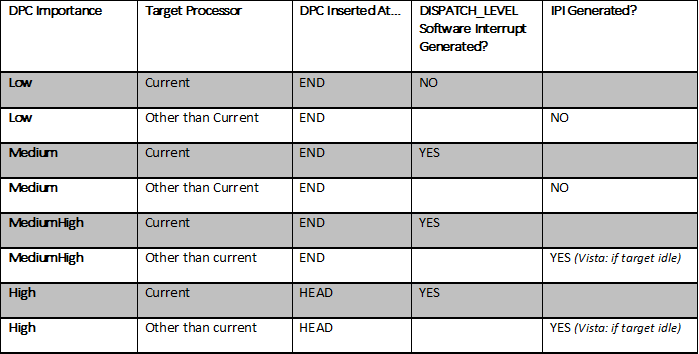

Where the DPC is placed on the target processor’s DPC List is an interesting question. Whether a DPC Object is inserted at the beginning or the end of the target processor’s DPC List is one aspect of the priority feature of DPCs. You can set the importance of a given DPC Object by using the function KeSetImportanceDpc. This DDI lets you indicate that the DPC Object has low, medium, or high importance; in Vista and later, you can also set the importance to “medium high.” Low, medium, and medium high importance DPCs are placed at the end of the DPC queue, while high importance DPCs are placed at the front of the queue. You may ask yourself at this point, “then what’s the difference between low, medium, and medium high?” We’ll answer that question shortly.
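The placement rule can be sketched with a sentinel-headed circular doubly linked list, which is the same shape as a LIST_ENTRY list manipulated with InsertHeadList and InsertTailList. The imp_* names and the enum below are hypothetical stand-ins, not the WDK’s KDPC_IMPORTANCE definitions.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical importance levels mirroring the four described in the text. */
typedef enum { IMP_LOW, IMP_MEDIUM, IMP_MEDIUM_HIGH, IMP_HIGH } imp_level;

typedef struct imp_dpc {
    struct imp_dpc *prev, *next;
    imp_level importance;
    int id;
} imp_dpc;

/* Circular list with a sentinel head, like a LIST_ENTRY list head. */
typedef struct { imp_dpc head; } imp_list;

static void imp_list_init(imp_list *l)
{
    l->head.prev = l->head.next = &l->head;
}

static void imp_insert(imp_list *l, imp_dpc *d)
{
    imp_dpc *h = &l->head;
    if (d->importance == IMP_HIGH) {
        /* High importance: front of the queue (cf. InsertHeadList). */
        d->next = h->next;
        d->prev = h;
        h->next->prev = d;
        h->next = d;
    } else {
        /* Low, medium, medium high: back of the queue (cf. InsertTailList). */
        d->prev = h->prev;
        d->next = h;
        h->prev->next = d;
        h->prev = d;
    }
}
```

Queuing a medium DPC, then a low one, then a high one yields the order high, medium, low: placement alone does not distinguish low from medium.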

The DISPATCH_LEVEL Software Interrupt

Once the DPC has been queued to the target processor, a DISPATCH_LEVEL software interrupt is typically requested on that processor. Whether the DISPATCH_LEVEL software interrupt is requested when the DPC Object is queued depends largely on four factors: the importance of the DPC, the target processor of the DPC, the depth of the DPC List on the target processor, and the “drain rate” of the DPC List on the target processor.

If the target processor for the DPC Object is the current processor, the DISPATCH_LEVEL software interrupt is requested if the DPC Object is of any importance other than low. For low importance DPCs, the software interrupt is requested only if the O/S believes that the processor is not servicing DPCs fast enough, either because the DPC queue has become too large or because it is not draining at a sufficiently fast rate.

If the target processor for the DPC Object is not the current processor, the decision process is different. Because requesting an interrupt on another processor involves a costly Inter-Processor Interrupt (IPI), the situations in which it is requested are restricted. Prior to Vista, the IPI was requested only if the DPC was high importance or if the DPC queue on the target processor had become too deep. Vista added medium high importance DPCs to this check and went a step further to cut down the number of IPIs by also requiring the target processor to be idle before the DISPATCH_LEVEL software interrupt is requested (see Table 1 for a high-level breakdown).
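To make the decision rules above concrete, here is a sketch that encodes our literal reading of these two paragraphs as a single predicate. It is not the kernel’s code: the article gives no numeric thresholds for “too deep” or “draining too slowly,” so those are reduced to booleans, and all names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical importance levels, ordered as described in the text. */
typedef enum { DPC_LOW, DPC_MEDIUM, DPC_MEDIUM_HIGH, DPC_HIGH } dpc_importance;

typedef struct {
    bool target_is_current;    /* queued to the processor doing the insert */
    dpc_importance importance;
    bool queue_too_deep;       /* threshold unspecified: an assumption */
    bool drain_too_slow;       /* threshold unspecified: an assumption */
    bool target_idle;          /* remote target processor is idle */
    bool vista_or_later;
} dpc_insert_state;

/* Literal reading of the rules in the text; not the kernel's actual code. */
static bool should_request_dispatch_interrupt(const dpc_insert_state *s)
{
    if (s->target_is_current) {
        /* Local: anything above low importance requests the interrupt;
           low importance only when the queue looks backed up. */
        if (s->importance != DPC_LOW)
            return true;
        return s->queue_too_deep || s->drain_too_slow;
    }

    /* Remote: the request costs an IPI, so it is made sparingly. */
    if (!s->vista_or_later)
        return s->importance == DPC_HIGH || s->queue_too_deep;

    bool eligible = s->importance == DPC_HIGH ||
                    s->importance == DPC_MEDIUM_HIGH ||
                    s->queue_too_deep;
    return eligible && s->target_idle;   /* Vista: target must also be idle */
}
```

Under this reading, a medium high DPC targeted at a busy remote processor on Vista does not trigger an IPI; it waits for that processor to drain its queue for some other reason.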

DPC Delivery

Once the DPC has been queued to the processor, at some point it must be dequeued and its callback executed. Remember that one of two things happened after the DPC was queued to the processor: either the DISPATCH_LEVEL software interrupt was requested or it was not.

Delivery from the Software Interrupt Service Routine

To keep things relatively simple, we’ll restrict our discussion here to the case of a DPC Object queued to the current processor. Let’s start with the case in which the DISPATCH_LEVEL software interrupt was requested. When KeInsertQueueDpc is called, the system can be in one of two situations. In the first, the current processor is running at an IRQL < DISPATCH_LEVEL, in which case the DISPATCH_LEVEL interrupt is delivered immediately. In the second, the current processor is at IRQL >= DISPATCH_LEVEL, in which case the interrupt remains pending until the IRQL is about to drop back below DISPATCH_LEVEL.

In either case, once the service routine for the DISPATCH_LEVEL interrupt begins executing, it checks to see if any DPCs are queued to the current processor. If the DPC queue is not empty, Windows will loop and entirely drain the DPC List before returning from the service routine.
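The immediate-versus-pending delivery described above can be modeled with a processor that tracks its IRQL and a single pending flag. This is a hypothetical user-mode sketch; only the ordering of the MODEL_* levels is meant to match the real IRQLs, and the drain of the DPC List is reduced to a counter.

```c
#include <assert.h>
#include <stdbool.h>

/* Only the ordering matters: PASSIVE_LEVEL < APC_LEVEL < DISPATCH_LEVEL. */
enum { MODEL_PASSIVE = 0, MODEL_APC = 1, MODEL_DISPATCH = 2 };

typedef struct {
    int irql;               /* current IRQL of the modeled processor */
    bool dispatch_pending;  /* software interrupt requested, not yet serviced */
    int serviced;           /* times the software interrupt handler has run */
} irql_cpu;

static void service_dispatch(irql_cpu *cpu)
{
    int old = cpu->irql;
    cpu->dispatch_pending = false;
    cpu->irql = MODEL_DISPATCH;  /* handler, and the DPC List drain, run here */
    cpu->serviced++;
    cpu->irql = old;
}

static void request_dispatch(irql_cpu *cpu)
{
    cpu->dispatch_pending = true;
    if (cpu->irql < MODEL_DISPATCH)  /* IRQL low enough: deliver immediately */
        service_dispatch(cpu);
}

static void raise_irql(irql_cpu *cpu, int new_irql)
{
    assert(new_irql >= cpu->irql);
    cpu->irql = new_irql;
}

static void lower_irql(irql_cpu *cpu, int new_irql)
{
    assert(new_irql <= cpu->irql);
    /* Deliver a pending request just before dropping below DISPATCH_LEVEL. */
    if (cpu->dispatch_pending && new_irql < MODEL_DISPATCH)
        service_dispatch(cpu);
    cpu->irql = new_irql;
}
```

A request made at PASSIVE_LEVEL is serviced on the spot; one made after raise_irql to DISPATCH_LEVEL stays pending until lower_irql takes the processor back below DISPATCH_LEVEL.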

Before draining the DPC List, Windows wants to ensure that it has a fresh execution stack for the DPC routines to run on. This presumably reduces the incidence of stack overflows in cases where the current stack does not have much space remaining. Thus, every PRCB also contains a pointer to a previously allocated DPC stack that Windows switches to before calling any DPCs:

0: kd> dt nt!_KPRCB DpcStack
   +0x868 DpcStack : Ptr32 Void

We can see evidence of the switch in the debugger if we set a breakpoint in a DPC routine. Here we chose a DPC from the ATAPI driver:

0: kd> bp atapi!IdePortCompletionDpc
0: kd> g
Breakpoint 1 hit
atapi!IdePortCompletionDpc:
f9806990 8bff            mov     edi,edi
0: kd> k
ChildEBP RetAddr
f9dc7fcc 80544e5f atapi!IdePortCompletionDpc
f9dc7ff4 805449cb nt!KiRetireDpcList+0x61
f9dc7ff8 f9a2b9e0 nt!KiDispatchInterrupt+0x2b
WARNING: Frame IP not in any known module. Following frames may be wrong.
805449cb 00000000 0xf9a2b9e0

Notice the strange call stack: it seems to disappear after the call to KiDispatchInterrupt. The problem is that WinDBG can no longer unwind the stack because of the stack switch, so the frames we see here are only those on the DPC stack. If we compare the EBP addresses shown against the stack limits of the current thread’s stack, we can see the discrepancy:

0: kd> !thread
THREAD 81964770 Cid 028c.02b8 Teb: 7ffd8000 Win32Thread: e1873008 RUNNING on processor 0
IRP List:
    8195b870: (0006,0190) Flags: 00000970 Mdl: 00000000
    819128b0: (0006,0190) Flags: 00000970 Mdl: 00000000
Not impersonating
DeviceMap e1001980
Owning Process 818d5978 Image: csrss.exe
Attached Process N/A Image: N/A
Wait Start TickCount 6779 Ticks: 0
Context Switch Count 4104 LargeStack
UserTime 00:00:00.000
KernelTime 00:00:00.265
Start Address 0x75b67cd7
Stack Init f9a2c000 Current f9a2ba58 Base f9a2c000 Limit f9a29000 Call 0

Note that the EBP addresses do not fall within the base and limit of the current thread’s stack. Using the techniques outlined in last issue’s Debugging Techniques: Take One…Give One article (September-October 2008), you can actually get the original call stack of the interrupted thread back. This can be useful as it sometimes can provide key information as to what kicked off a chain of events on that processor.
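The comparison can be made mechanical. On x86 a kernel stack grows downward, so a valid address lies in the half-open range [Limit, Base). The small helpers below are our own (hypothetical) illustration, applied to the numbers from the !thread and k output above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Stack grows down from Base toward Limit: valid range is [limit, base). */
static bool addr_in_stack(uint32_t addr, uint32_t limit, uint32_t base)
{
    return addr >= limit && addr < base;
}

/* Count how many ChildEBP values from a `k` listing fall outside a stack. */
static size_t count_frames_outside(const uint32_t *ebps, size_t n,
                                   uint32_t limit, uint32_t base)
{
    size_t outside = 0;
    for (size_t i = 0; i < n; i++)
        if (!addr_in_stack(ebps[i], limit, base))
            outside++;
    return outside;
}
```

With Limit f9a29000 and Base f9a2c000, all three frames (f9dc7fcc, f9dc7ff4, f9dc7ff8) are reported as outside the thread’s stack, while the thread’s Current value f9a2ba58 is inside it. The same check also shows that 0xf9a2b9e0, the value WinDBG could not attribute to any module, falls inside the thread’s stack range.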

Once the O/S has switched stacks, it begins dequeuing DPC Objects and executing their callbacks. Because each DPC Object is removed from the queue before its callback runs, your DPC routine is no longer on any DPC queue while it is executing, and its DPC Object may be queued again.
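The consequence of dequeuing before calling is easy to see in a sketch. In this hypothetical user-mode model (the drain_* names are ours), the drain loop unlinks each entry and clears its queued mark before invoking the routine, and keeps looping until the list is empty, so a routine that re-queues itself succeeds and runs again in the same drain.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct drain_dpc;
typedef void (*drain_routine)(struct drain_dpc *dpc, void *context);

typedef struct drain_dpc {
    struct drain_dpc *next;
    bool queued;
    drain_routine routine;
    void *context;
} drain_dpc;

typedef struct { drain_dpc *head, *tail; } drain_list;

static bool drain_insert(drain_list *l, drain_dpc *d)
{
    if (d->queued)
        return false;              /* already on the list: ignored */
    d->queued = true;
    d->next = NULL;
    if (l->tail != NULL) l->tail->next = d; else l->head = d;
    l->tail = d;
    return true;
}

/* Drain until empty; each entry is unlinked before its routine runs. */
static void drain_retire_list(drain_list *l)
{
    while (l->head != NULL) {
        drain_dpc *d = l->head;
        l->head = d->next;
        if (l->head == NULL) l->tail = NULL;
        d->queued = false;         /* dequeued before the callback */
        d->routine(d, d->context); /* the callback may re-insert d */
    }
}

/* Example routine: re-queues itself until it has run three times. */
typedef struct { drain_list *list; int runs; } requeue_ctx;

static void requeue_until_three(drain_dpc *dpc, void *context)
{
    requeue_ctx *ctx = context;
    ctx->runs++;
    if (ctx->runs < 3) {
        bool ok = drain_insert(ctx->list, dpc); /* legal: dpc is off the list */
        assert(ok);
        (void)ok;
    }
}
```

Queuing one drain_dpc with requeue_until_three and calling drain_retire_list once runs the routine three times and leaves the list empty.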

Delivery from the Idle Thread

But what about those low importance DPCs or targeted DPCs that didn’t request the DISPATCH_LEVEL software interrupt? Who processes those? Well, there are actually two ways in which they’ll be processed. Either another DPC will come along that will request the DISPATCH_LEVEL interrupt and the DPC will be picked up on the subsequent drain, or the idle loop will come along and notice that the DPC queue is not empty.

Part of the idle loop’s work is to check whether the DPC queue is empty. If it finds that the queue is not empty, it drains the queue by dequeuing entries and calling their callbacks. We can see this in another call stack, captured for the same DPC routine used in the previous example:

Breakpoint 1 hit
atapi!IdePortCompletionDpc:
f9806990 8bff            mov     edi,edi
0: kd> k
ChildEBP RetAddr
80550428 80544e5f atapi!IdePortCompletionDpc
80550450 80544d44 nt!KiRetireDpcList+0x61
80550454 00000000 nt!KiIdleLoop+0x28

The difference here is that the stack is not switched, so the DPCs actually execute on the idle thread’s stack. Because the idle loop itself uses so little of its thread’s stack, there is not much benefit in going to the effort of switching stacks in this case.
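The two delivery paths differ only in whether a stack switch happens around the drain. The hypothetical model below records, for each DPC id, which stack it ran on: the software interrupt path switches to the per-processor DPC stack (named after the DpcStack field shown earlier) and back, while the idle loop path drains in place. Real DPC objects, IRQL, and re-queuing are deliberately left out.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_DPCS 8

typedef struct {
    int queued[MAX_DPCS];          /* ids waiting on this processor's DPC List */
    size_t nqueued;
    const char *ran_on[MAX_DPCS];  /* stack each DPC ran on, indexed by id */
    const char *current_stack;     /* stack the processor is using right now */
} stack_cpu;

static void stack_queue(stack_cpu *cpu, int id)
{
    assert(cpu->nqueued < MAX_DPCS && id >= 0 && id < MAX_DPCS);
    cpu->queued[cpu->nqueued++] = id;
}

/* Run every queued DPC on whatever stack is current, then empty the list. */
static void run_all(stack_cpu *cpu)
{
    for (size_t i = 0; i < cpu->nqueued; i++)
        cpu->ran_on[cpu->queued[i]] = cpu->current_stack;
    cpu->nqueued = 0;
}

/* Software interrupt path: switch to the DPC stack, drain, switch back. */
static void dispatch_interrupt_drain(stack_cpu *cpu)
{
    const char *saved = cpu->current_stack;
    cpu->current_stack = "DpcStack";
    run_all(cpu);
    cpu->current_stack = saved;
}

/* Idle loop path: if anything is queued, drain on the idle thread's stack. */
static void idle_loop_iteration(stack_cpu *cpu)
{
    if (cpu->nqueued != 0)
        run_all(cpu);
}
```

Starting with current_stack set to "idle thread stack", a DPC drained by idle_loop_iteration records that stack, while one drained by dispatch_interrupt_drain records "DpcStack" and leaves the processor back on the idle thread’s stack afterward.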

Conclusion

Hopefully this cleared up a few misconceptions about DPCs and how they are handled by the system.