Last reviewed and updated: 10 August 2020

Serialization, the ability to execute one or more lines of code atomically, is a vital issue in WDF drivers. In fact, due to the inherently pre-emptible, reentrant, and multiprocessing nature of Windows, getting synchronization right is critical for any kernel-mode module.

WDF provides numerous options for serializing access to shared data structures. One of those options is Synchronization Scope, which most people refer to as “Sync Scope.” In this article, we’re going to examine the concept of Sync Scope. We’ll talk about how it works, the complexities it introduces, and when it might be the most appropriate option for implementing serialization in a WDF driver.

What’s The Problem?

Just to be sure we all understand the problem that proper serialization solves, let’s look at an example. If you’re already well versed in writing multi-threaded/multi-processor safe code, feel free to skip this section. If you wonder about when and why we need proper serialization in Windows drivers, read on.

Suppose we maintain a block of statistics in our driver’s Device Context such as shown in Figure 1.

typedef struct _MY_DEVICE_CONTEXT {

WDFDEVICE DeviceHandle;

...

//

// Statistics

// We keep the following counts/values for our device

//

LONG TotalRequestsProcessed;

LONG ReadsProcessed;

LONGLONG BytesRead;

LONG WritesProcessed;

LONGLONG BytesWritten;

LONG IoctlsProcessed;

...

} MY_DEVICE_CONTEXT, *PMY_DEVICE_CONTEXT;

To maintain these statistics, our driver would increment and update the appropriate fields in each of its EvtIoXxx Event Processing Callbacks, as shown in Figure 2.

VOID

MyEvtIoWrite(WDFQUEUE Queue,

WDFREQUEST Request,

size_t Length)

{

NTSTATUS status = STATUS_SUCCESS;

WDFDEVICE device = WdfIoQueueGetDevice(Queue);

PMY_DEVICE_CONTEXT context;

PVOID buffer;

size_t length;

//

// Get a pointer to my device context

//

context = MyGetContextFromDevice(device);

//

// Get the pointer and length to the requestor's data buffer

//

status = WdfRequestRetrieveInputBuffer(Request,

MY_MAX_LENGTH,

&buffer,

&length);

if (!NT_SUCCESS(status)) {

// ... do something ...

}

//

// Update our device statistics

//

context->TotalRequestsProcessed++;

context->WritesProcessed++;

context->BytesWritten += length;

//

// ... rest of function ...

return;

}

On entering this routine, we get a pointer to our Device’s context structure, and then get a pointer to the user’s data buffer and its length. We then update all three relevant statistics fields. After this, we presumably go on to do the rest of the work this driver needs to do.

But there’s a problem with the statistics code in this routine as written: no serialization is performed when the statistics are updated. Consider what could happen if we get unlucky, and our EvtIoWrite function (for the same device) is running at the same time on two processors and each instance tries to update the statistics block. It’s possible that we get the following sequence of events:

- The code running on Processor 0 reads the value for TotalRequestsProcessed from the Device Context. Let’s say that value is currently 0.

- Right at the same time, the code running on Processor 1, which also is executing our EvtIoWrite function and trying to update the statistics, also reads the value for TotalRequestsProcessed from the Device Context. It’s also going to read a value of 0.

- The code on Processor 0 increments the count, and writes it back to the Device Context. TotalRequestsProcessed is now 1.

- The code running on Processor 1 does the same thing: It increments the count it read, and writes it back to the Device Context. TotalRequestsProcessed is now (still) 1.

The result from the above sequence of events is obviously incorrect: two Requests were processed, but the count is 1. It’s wrong because both instances of the EvtIoXxx callback read the original value for TotalRequestsProcessed at the same time (or, very close to the same time), add 1 to it, and write it back.
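The interleaving above can be replayed deterministically in ordinary user-mode C. This is only a sketch: the STATS type and the replay_lost_update function are hypothetical names, not part of WDF or of any real driver, and the two "processors" are simulated by two local variables on a single thread so that the unlucky ordering happens every time.

```c
/* Hypothetical stand-in for the counter kept in the Device Context. */
typedef struct {
    long TotalRequestsProcessed;
} STATS;

/*
 * Replays the four steps from the list above on one thread.
 * cpu0Copy and cpu1Copy play the role of the register that each
 * processor loads the counter into before incrementing it.
 */
long replay_lost_update(STATS *stats)
{
    long cpu0Copy;
    long cpu1Copy;

    cpu0Copy = stats->TotalRequestsProcessed;      /* Processor 0 reads  */
    cpu1Copy = stats->TotalRequestsProcessed;      /* Processor 1 reads  */
    stats->TotalRequestsProcessed = cpu0Copy + 1;  /* Processor 0 writes */
    stats->TotalRequestsProcessed = cpu1Copy + 1;  /* Processor 1 writes */

    return stats->TotalRequestsProcessed;
}
```

Starting from a count of 0, two increments are performed but the function returns 1, because the second write overwrites the first with a value computed from stale data.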

The way we fix this problem is to apply serialization. We guard the device statistics in our Device Context with a lock. This lock has the characteristic that it can only be held by one thread at a time, and any other requestors who try to acquire the lock while it’s held by another thread wait until the lock is available. We make a rule in our driver that anytime you need to update the data in the statistics block, you must be holding the lock. Thus, the new steps would be as follows:

- The code running on Processor 0 successfully acquires the lock guarding the statistics block.

- The code running on Processor 0 reads the value for TotalRequestsProcessed from the Device Context. Let’s say that value is currently 0.

- The code running on Processor 1 (which also is executing our EvtIoWrite function and trying to update the statistics) attempts to acquire the lock guarding the statistics block, but waits because the lock is already held by the code running on Processor 0.

- The code on Processor 0 increments the count, and writes it back to the Device Context. TotalRequestsProcessed is now 1.

- The code running on Processor 0 releases the lock.

- The code running on Processor 1, which was waiting for the lock, now acquires it (now that the lock has been released by the code running on Processor 0).

- The code running on Processor 1 reads the value for TotalRequestsProcessed from the Device Context. It reads the updated value of 1.

- The code running on Processor 1 increments the count it read, and writes it back to the Device Context. TotalRequestsProcessed is now 2.

- The code running on Processor 1 releases the lock.

And the result is now… correct. Yay!
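The locked sequence can be replayed the same way. The sketch below is a single-threaded user-mode model, not kernel code: GUARDED_STATS, model_try_acquire, model_release, and replay_locked_update are all hypothetical names, and the "lock" is just a flag that refuses a second owner where a real lock would make the caller wait.

```c
#include <stdbool.h>

/* Hypothetical model of a lock plus the counter it guards. */
typedef struct {
    bool Held;      /* true while some simulated processor owns the lock */
    int  Owner;     /* which simulated processor owns it                 */
    long TotalRequestsProcessed;
} GUARDED_STATS;

/* Returns true if the lock was free and is now owned by cpu. */
bool model_try_acquire(GUARDED_STATS *s, int cpu)
{
    if (s->Held) {
        return false;   /* a real lock would make the caller wait here */
    }
    s->Held = true;
    s->Owner = cpu;
    return true;
}

/* Only the current owner may release the lock. */
void model_release(GUARDED_STATS *s, int cpu)
{
    if (s->Held && s->Owner == cpu) {
        s->Held = false;
    }
}

/* Replays the nine steps above; reports whether Processor 1 had to wait. */
long replay_locked_update(GUARDED_STATS *s, bool *cpu1HadToWait)
{
    long copy;

    model_try_acquire(s, 0);                    /* P0 acquires the lock   */
    copy = s->TotalRequestsProcessed;           /* P0 reads               */
    *cpu1HadToWait = !model_try_acquire(s, 1);  /* P1 tries and must wait */
    s->TotalRequestsProcessed = copy + 1;       /* P0 writes              */
    model_release(s, 0);                        /* P0 releases            */

    model_try_acquire(s, 1);                    /* P1 now acquires        */
    copy = s->TotalRequestsProcessed;           /* P1 reads updated value */
    s->TotalRequestsProcessed = copy + 1;       /* P1 writes              */
    model_release(s, 1);                        /* P1 releases            */

    return s->TotalRequestsProcessed;
}
```

Starting from 0, the function returns 2 and reports that Processor 1 was refused the lock while Processor 0 held it.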

Why Proper Serialization is Hard

The concept that a given lock guards a series of fields in a structure is enforced only by the engineering discipline of the devs working on the driver. In other words, there’s no Windows or WDF feature that can tell you “Hey, dude! You forgot to acquire the lock and you’re updating this structure that’s supposed to be protected!” While there are SAL annotations that can help with this, there is presently no 100% reliable method for enforcing your locking policy. Therefore, the onus is on you – the driver developer – to properly and consistently implement whatever locking policy you create.

Thus, there are several challenges to using serialization properly. Among them are:

- You, the developer, must recognize where serialization is needed in your driver code.

- Once you recognize where serialization is needed, you must choose the right type of lock (there are several different lock types) to use for that serialization.

- Having chosen the right type of lock, you need to be sure you acquire and release the lock properly, in every single place in your driver where serialization is required. Not locking when you should will lead to unpredictable results.

If you’re not used to writing code that is multiprocessor and multithread safe, the above issues might seem daunting. And, trust me, getting your locking right can be quite a challenge, even for experienced devs. But, once you’ve gotten enough practice, identifying most potential “race conditions” (sequences that need to be performed atomically) will become second nature to you.

Introducing: Sync Scope

The above challenges are precisely the issues that WDF tries to solve with the concept of Sync Scope. Sync Scope provides a simple serialization scheme that seeks to relieve driver devs of some of the burdens of implementing proper serialization.

With Sync Scope, you simply tell WDF that you want the common callbacks associated with your WDFQUEUE, WDFFILEOBJECT, and WDFREQUEST to be serialized. As a result, the Framework will choose a lock type to use, and automatically acquire that lock before these routines are called in your driver and will release that lock when your driver returns from these routines.

You tell WDF the granularity of the locking that you want in your driver when you specify the Sync Scope. You may choose Sync Scope Device or Sync Scope Queue. In the next sections, we’ll talk about each of these two options.

Choosing Sync Scope Device

When you choose Sync Scope Device you’re telling WDF that you want all the applicable Event Processing Callbacks in your driver to be serialized at the Device level. This means that if you have multiple Queues per Device (say one Queue that handles both reads and writes and another Queue that handles device controls) you do not want any EvtIoXxx Event Processing Callbacks for the same device to be executing at the same time, regardless of the Queue with which the Callback is associated.

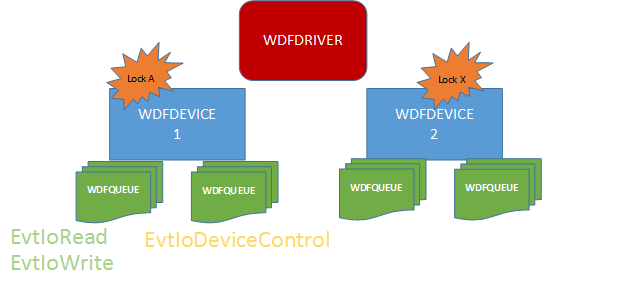

When you choose Sync Scope Device, WDF maintains a lock at the Device level. Before calling any applicable Queue, Request, or File Object related Event Processing Callback, WDF acquires this lock. When your driver returns from one of these callbacks, WDF releases the lock. The locking structure is shown in Figure 3.

So, in the scheme shown in Figure 3, none of the indicated EvtIoXxx Event Processing Callbacks (EvtIoRead, EvtIoWrite, EvtIoDeviceControl) for a given device would run in parallel. The Framework would automatically serialize these routines, acquiring a lock before they’re called and releasing it afterwards. However, EvtIoXxx Event Processing Callbacks for WDFDEVICE 1 and WDFDEVICE 2 could run in parallel. The Framework is only serializing callbacks within a given device, not across all devices for a given driver.
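Conceptually, Sync Scope Device wraps each applicable callback in an acquire/release pair on a lock that belongs to the device. The sketch below models that wrapper in plain user-mode C. It is an illustration of the idea only, not the Framework's implementation: MODEL_DEVICE, model_dispatch, and model_evt_io_write are hypothetical names, and the lock is a simple flag.

```c
#include <stdbool.h>

/* Hypothetical model of a device that owns one Sync Scope lock. */
typedef struct MODEL_DEVICE {
    bool ScopeLockHeld;       /* the device-level lock                     */
    bool LockSeenInCallback;  /* set by the callback, for demonstration    */
} MODEL_DEVICE;

typedef void (*MODEL_CALLBACK)(MODEL_DEVICE *Device);

/* Stand-in for a driver's EvtIoXxx callback. */
void model_evt_io_write(MODEL_DEVICE *Device)
{
    /* Record that we were called with the device's lock held. */
    Device->LockSeenInCallback = Device->ScopeLockHeld;
}

/*
 * What happens, conceptually, around each applicable callback:
 * acquire the device's lock, call the driver, release the lock.
 * Returns false where a real lock would make the caller wait.
 */
bool model_dispatch(MODEL_DEVICE *Device, MODEL_CALLBACK Callback)
{
    if (Device->ScopeLockHeld) {
        return false;
    }
    Device->ScopeLockHeld = true;
    Callback(Device);
    Device->ScopeLockHeld = false;
    return true;
}
```

Because each MODEL_DEVICE carries its own flag, a callback in progress on one device blocks further callbacks for that device only; a dispatch for a second device proceeds independently.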

Choosing Sync Scope Queue

If you select Sync Scope Queue you tell WDF that you want the applicable Queue and Request Event Processing Callbacks to be serialized on a per Queue basis. So, if you have multiple Queues the Event Processing Callbacks associated with each specific Queue will be serialized. So, for example, let’s once again assume you have one Queue that handles both reads and writes, and another Queue that handles IOCTLs. In this scheme, the callbacks for read and write will never run in parallel because they are serviced by the same Queue. However, either EvtIoRead or EvtIoWrite can be running at the same time as EvtIoDeviceControl, because read and write are handled by one Queue and device control requests are handled by a different Queue.

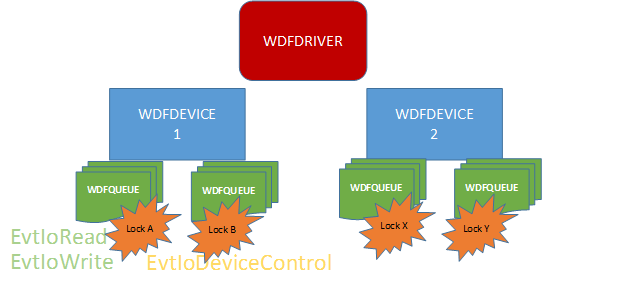

When you select Sync Scope Queue, WDF maintains a lock for each Queue you create. Before calling any applicable Queue or Request related Event Processing Callbacks, WDF acquires the lock for the associated Queue. This ensures that any callbacks that are associated with a given Queue (and, hence, share the same lock) will never run at the same time. When your driver returns from one of these callbacks, WDF releases the lock. This is illustrated in Figure 4.

Thus, in the scheme shown in Figure 4, the EvtIoRead and EvtIoWrite Event Processing Callbacks would never run at the same time for a given device, because they are associated with the same Queue, and Sync Scope Queue has been specified. However, either of these callbacks (EvtIoRead or EvtIoWrite) could run at the same time as EvtIoDeviceControl. This is because WDF maintains the Sync Scope lock at the Queue level. And, of course, EvtIoRead (or any Event Processing Callbacks) for WDFDEVICE 1 could once again be running at the same time as EvtIoRead (or any Event Processing Callback) for WDFDEVICE 2. This is because locking is on a per Queue basis, and a given Queue is always associated with a single WDFDEVICE.
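The difference between the two scopes comes down to which callbacks share a lock. As a rough user-mode model (the type and function names here are hypothetical, chosen for illustration), two callbacks may run in parallel only if they do not share the lock implied by the chosen scope:

```c
#include <stdbool.h>

typedef enum {
    MODEL_SCOPE_NONE,
    MODEL_SCOPE_QUEUE,
    MODEL_SCOPE_DEVICE
} MODEL_SCOPE;

/* Identifies where a callback belongs: which device, and which Queue. */
typedef struct {
    int DeviceId;
    int QueueId;
} MODEL_CALLBACK_SITE;

/* True if callbacks A and B could be running at the same time. */
bool model_may_run_in_parallel(MODEL_SCOPE Scope,
                               MODEL_CALLBACK_SITE A,
                               MODEL_CALLBACK_SITE B)
{
    bool sameDevice = (A.DeviceId == B.DeviceId);
    bool sameQueue  = sameDevice && (A.QueueId == B.QueueId);

    switch (Scope) {
    case MODEL_SCOPE_DEVICE:
        return !sameDevice;   /* one lock per device */
    case MODEL_SCOPE_QUEUE:
        return !sameQueue;    /* one lock per Queue  */
    default:
        return true;          /* no Sync Scope: nothing is serialized */
    }
}
```

With a read/write Queue and an IOCTL Queue on the same device, the model reports that read and device control callbacks can overlap under Sync Scope Queue but not under Sync Scope Device, and that callbacks for two different devices can overlap under either scope.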

How to Select Sync Scope

You specify the desired Sync Scope before creating either your WDFDEVICE or WDFQUEUE Object. Sync Scope is specified in the SynchronizationScope field of the WDF_OBJECT_ATTRIBUTES structure. For example, if you want the applicable Event Processing Callbacks in your driver to be serialized on a per-device basis, you specify WdfSynchronizationScopeDevice as shown in the example code in Figure 5.

// ... beginning part of EvtDriverDeviceAdd ...

WDF_OBJECT_ATTRIBUTES_INIT_CONTEXT_TYPE(&attributes,

MY_DEVICE_CONTEXT);

//

// Ensure that all our EvtIoXxx callbacks never run in parallel

//

attributes.SynchronizationScope = WdfSynchronizationScopeDevice;

status = WdfDeviceCreate(&DeviceInit, &attributes, &device);

if (!NT_SUCCESS(status))

{

// ... whatever ...

goto Exit;

}

// ... rest of function ...

If you don’t want to use Sync Scope Device, you can specify WdfSynchronizationScopeQueue in the WDF_OBJECT_ATTRIBUTES structure that is specified when you create any WDF Queue. This allows you to use Sync Scope Queue for only a subset of Queues if you so desire.

As to which Event Processing Callback functions are serialized, for Sync Scope Device the following functions are automatically handled:

- EvtDeviceFileCreate

- EvtFileCleanup

- EvtFileClose

- EvtIoRead

- EvtIoWrite

- EvtIoDeviceControl

- EvtIoDefault

- EvtIoInternalDeviceControl

- EvtIoStop

- EvtIoResume

- EvtIoQueueState

- EvtIoCanceledOnQueue

- EvtRequestCancel

For Sync Scope Queue, all the above functions except those associated with WDFFILEOBJECTs (EvtDeviceFileCreate, EvtFileCleanup, and EvtFileClose) are serialized. Note that there are several interaction issues regarding Sync Scope and IRQL, which we’ll discuss later in this article.
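The list above can be captured as a small lookup table. The following user-mode sketch simply encodes the callback names from this article together with a flag marking the three File Object callbacks; the table and the model_is_serialized function are hypothetical helpers for illustration, not a WDF API.

```c
#include <stdbool.h>
#include <string.h>

typedef enum { MODEL_SCOPE_QUEUE, MODEL_SCOPE_DEVICE } MODEL_SCOPE;

typedef struct {
    const char *Name;
    bool        FileObjectCallback;  /* serialized only by Sync Scope Device */
} MODEL_CALLBACK_INFO;

/* The callbacks listed above, in the same order. */
static const MODEL_CALLBACK_INFO ModelCallbacks[] = {
    { "EvtDeviceFileCreate",        true  },
    { "EvtFileCleanup",             true  },
    { "EvtFileClose",               true  },
    { "EvtIoRead",                  false },
    { "EvtIoWrite",                 false },
    { "EvtIoDeviceControl",         false },
    { "EvtIoDefault",               false },
    { "EvtIoInternalDeviceControl", false },
    { "EvtIoStop",                  false },
    { "EvtIoResume",                false },
    { "EvtIoQueueState",            false },
    { "EvtIoCanceledOnQueue",       false },
    { "EvtRequestCancel",           false },
};

/* True if the named callback is serialized under the given Sync Scope. */
bool model_is_serialized(MODEL_SCOPE Scope, const char *CallbackName)
{
    size_t i;

    for (i = 0; i < sizeof(ModelCallbacks) / sizeof(ModelCallbacks[0]); i++) {
        if (strcmp(ModelCallbacks[i].Name, CallbackName) == 0) {
            return Scope == MODEL_SCOPE_DEVICE ||
                   !ModelCallbacks[i].FileObjectCallback;
        }
    }
    return false;   /* not one of the callbacks in the list */
}
```

For example, the model reports EvtFileClose as serialized under Sync Scope Device but not under Sync Scope Queue, while EvtIoRead is serialized under both.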

Love Sync Scope? Want More?

If you like the idea of Sync Scope, but you’re thinking it would be nice if you could expand the functions to which Sync Scope applies, there’s yet another feature you should be aware of: Automatic Serialization. You can optionally extend the serialization provided by either of the Sync Scopes to three other callbacks:

- The callback from a given WDFTIMER

- The callback for a given WDFWORKITEM

- The DpcForIsr associated with a given device

You can elect this option by setting the AutomaticSerialization field of the appropriate Object configuration structure to TRUE. To serialize execution of your DpcForIsr, you set the AutomaticSerialization field for the WDF_INTERRUPT_CONFIG structure to TRUE. In fact, it’s worth noting that AutomaticSerialization is set to TRUE by default for WDFTIMER and WDFWORKITEM Objects. So your EvtTimerFunc Event Processing Callbacks and your EvtWorkItemFunc Event Processing Callbacks will, by default, be serialized at the Device level if you’re using Sync Scope Device, and will automatically be serialized at the Queue level if you make the Queue the parent of the created WDFTIMER or WDFWORKITEM.
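The rules in the paragraph above can be summarized in a small user-mode model. Everything here is a hypothetical illustration: the enum and function names are invented, the interrupt default of FALSE is an assumption consistent with the text (which says you must set it to TRUE), and combinations that this article does not describe are reported as such rather than guessed.

```c
#include <stdbool.h>

typedef enum { MODEL_OBJ_TIMER, MODEL_OBJ_WORKITEM, MODEL_OBJ_INTERRUPT } MODEL_OBJECT_KIND;
typedef enum { MODEL_SCOPE_DEVICE, MODEL_SCOPE_QUEUE } MODEL_SCOPE;
typedef enum { MODEL_PARENT_DEVICE, MODEL_PARENT_QUEUE } MODEL_PARENT;

typedef enum {
    MODEL_LOCK_NONE,          /* callback is not serialized               */
    MODEL_LOCK_DEVICE,        /* serialized with the device-level lock    */
    MODEL_LOCK_QUEUE,         /* serialized with the parent Queue's lock  */
    MODEL_LOCK_NOT_DESCRIBED  /* combination not covered by this article  */
} MODEL_LOCK;

/* Default value of AutomaticSerialization for each kind of object. */
bool model_default_automatic_serialization(MODEL_OBJECT_KIND Kind)
{
    /* TRUE by default for timers and work items; the DpcForIsr must opt in. */
    return Kind == MODEL_OBJ_TIMER || Kind == MODEL_OBJ_WORKITEM;
}

/* Which lock, if any, the extended callback runs under. */
MODEL_LOCK model_lock_for_callback(bool AutomaticSerialization,
                                   MODEL_SCOPE Scope,
                                   MODEL_PARENT Parent)
{
    if (!AutomaticSerialization) {
        return MODEL_LOCK_NONE;
    }
    if (Scope == MODEL_SCOPE_DEVICE) {
        return MODEL_LOCK_DEVICE;
    }
    if (Parent == MODEL_PARENT_QUEUE) {
        return MODEL_LOCK_QUEUE;
    }
    return MODEL_LOCK_NOT_DESCRIBED;
}
```

So a work item left at its default and parented on a Queue that uses Sync Scope Queue is modeled as sharing that Queue's lock, while any object with AutomaticSerialization set to FALSE runs outside the Sync Scope entirely.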

Using Sync Scope: Life Is Good?

So, is Sync Scope the solution to all the serialization problems we could ever encounter in a driver? Maybe. It certainly simplifies your life as a driver dev. For example, regardless of the number of Queues configured, setting Sync Scope to Device prior to the creation of our Device Object will “fix” the statistics management example shown in Figure 1. If we parent any relevant Work Items or Timers on our Device Object, we’ll even be able to safely manipulate the statistics in those callbacks, assuming we leave AutomaticSerialization set to TRUE when we create the Objects. And, if we needed to manipulate the statistics from within our DpcForIsr, all we’d have to do is set AutomaticSerialization to TRUE when we create our driver’s Interrupt Object. So life is indeed easy. Using Sync Scope makes these serialization issues “just go away.”

It’s important to recognize that even if your driver has a single Queue and that Queue uses Sequential Dispatching, Sync Scope can still prove to be quite useful. For example, Sync Scope can certainly be helpful when you’re using a single Queue with Sequential dispatching but have a dead man timer or background work item that could fire at any time while processing that one Request. Either of these activities (the timer or work item) could race with your Request processing or completion code. So, Sync Scope to the rescue!

But there are some less than wonderful things about using Sync Scope as well. The most obvious downside is that it doesn’t allow any part of the applicable callbacks to run in parallel within their Sync Scope. Thus, even if the only place we need serialization in our EvtIoXxx Event Processing Callbacks is when we update our statistics block (as shown in Figure 1), all the code in our EvtIoXxx Event Processing Callback runs under lock. And the amount of code running under serialization only increases the more we expand Sync Scope through the use of AutomaticSerialization.

For an example, let’s look at how using Sync Scope Device affects your driver and device’s overall processing. What Sync Scope Device means for your EvtIoXxx functions is that only one of your EvtIoXxx Event Processing Callbacks can be running at a time for a given device. Consider the case when your device can have one read and one write in progress simultaneously. In this case, you would most likely have two Queues using Sequential Dispatching – one for reads and another for writes. Specifying Sync Scope Device means that while your device can still have two Requests active at the same time, the EvtIoXxx Event Processing Callbacks initiating those Requests could never be running simultaneously. This limits your device’s performance and throughput. When you expand your Sync Scope using AutomaticSerialization to include your DpcForIsr, your driver can now only be initiating a single I/O request (a read or a write) or completing a single I/O request (of either type) at one time, regardless of how many processor cores there are in the system or what those cores are doing. Consider the possible impact of such a scheme to a driver for a Programmed I/O type device: All the time your driver is moving data to or from your device (using WRITE_REGISTER_BUFFER_UCHAR, for example) you’re holding the lock, and your driver is prevented from initiating or completing any other Requests for that device. Not good if you have a device where throughput or maximizing device utilization or reducing Request processing latency is important. Not good at all.

Of course, if your device is slow and your driver doesn’t do much to initiate or complete Requests, Sync Scope Device might not be such a terrible thing no matter how many Requests it can have in progress at the same time.

Performance Isn’t the Only Concern

Unfortunately, potential lack of performance and lower device utilization due to over-serialization aren’t the only issues with Sync Scope. The real complexity of using Sync Scope has to do with IRQL.

As we’ve discussed previously, the primary feature of Sync Scope is that the Framework automatically acquires and releases a lock around its calls to the applicable callbacks in your driver. But another important feature of Sync Scope is that WDF automatically selects the appropriate type of lock to use to guard those callbacks. And it’s this second feature, selecting the appropriate lock type, which can become complicated and cause unexpected consequences.

Recall that by default WDF is allowed to call your driver’s EvtIoXxx Event Processing Callbacks at any IRQL <= DISPATCH_LEVEL. As a result, when you select a Sync Scope, WDF defaults to selecting a spin lock as the type of lock it will use to serialize your callbacks.

Now, some of you might be jumping to conclusions and saying “A spin lock! Oh wow… that’s a pretty heavy-weight lock to use!” But, in fact spin locks are pretty fast to acquire and release. Consider that acquiring almost any other type of lock involves acquiring and releasing a spin lock for internal serialization purposes. Compared to most other locks, you could say that spin locks are “high speed, low drag.” However, there are two particular issues that using a spin lock creates.

The first issue is that the code running while a spin lock is held (that is, between the acquire and release) runs at IRQL DISPATCH_LEVEL. Code running at this IRQL is not subject to preemption. This means that, even in the face of quantum exhaustion, a thread running at IRQL DISPATCH_LEVEL will continue running until the IRQL is lowered. This is as it should be, because – by design – code sequences running at IRQL DISPATCH_LEVEL should be short. How short? According to the WDK docs, “a typical DPC routine should run for no more than 100 microseconds” (from the doc page for KeQueryDpcWatchdogInformation). This is because DPC routines run at IRQL DISPATCH_LEVEL – no other reason.

Thus when you use Sync Scope and WDF chooses a spin lock, depending on the processing you need to do in your EvtIoXxx routine your driver might wind up spending quite a bit of time at IRQL DISPATCH_LEVEL. And while routines like your DpcForIsr always architecturally must run at IRQL DISPATCH_LEVEL, your EvtIoXxx Event Processing Callbacks only might run at IRQL DISPATCH_LEVEL. If you set Sync Scope, by default your EvtIoXxx routines will always run at IRQL DISPATCH_LEVEL. We’ll talk about when and how you might choose to override the defaults later in this article.

Note that running at IRQL DISPATCH_LEVEL won’t by itself necessarily hurt the throughput of your driver (assuming you don’t count the throughput and device utilization losses inherent in running under lock that we discussed earlier). However, spending an excessive amount of time at IRQL DISPATCH_LEVEL can negatively impact the performance of the overall system. When running at DISPATCH_LEVEL you’re basically preventing the Windows scheduler from doing its job. You’re monopolizing a core for your use, as your DISPATCH_LEVEL code continues running until it’s done.

Keeping It Consistent

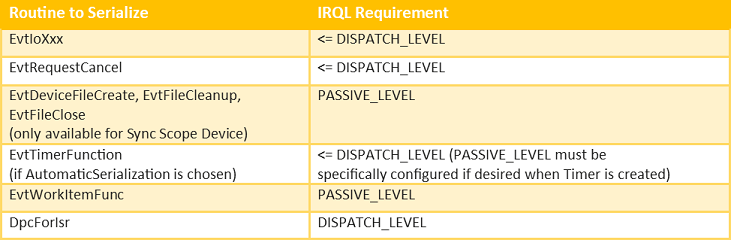

The second issue that using a spin lock for Sync Scope serialization causes involves expanding your serialization domain using AutomaticSerialization. You must be sure that your chosen Sync Scope, plus your choices of routines to which you want to extend that Sync Scope with AutomaticSerialization, plus the IRQL that the Framework’s choice of locking implies, are all consistent and proper. What do I mean? Well, take a look at Table 1 for starters.

Table 1 shows the various callbacks that Sync Scope can serialize and the IRQL requirements imposed by those callbacks. A careful look at this table will show that there is no single type of lock that can be used that will successfully serialize all potential callbacks. For example, if you let the Framework choose the default spin lock, that will work for your EvtIoXxx Event Processing Callbacks but that cannot work for File Object related callbacks or your Work Item callbacks. This is because acquiring a spin lock raises the IRQL to DISPATCH_LEVEL, and the File Object and Work Item related callbacks all must run at IRQL PASSIVE_LEVEL.

Changing the Default Framework Lock Selection

So what can you do if you want to use Sync Scope and (for example) you need to extend that Sync Scope to your File Object or Work Item related callbacks? The answer is that you need to influence the Framework to choose a lock type that’s compatible with the callbacks that you want to serialize.

The Framework chooses the type of lock to use based on the constraints imposed on your EvtIoXxx Event Processing Callbacks. As we’ve now said multiple times, these callbacks by default can run at IRQL DISPATCH_LEVEL or below, and therefore the Framework meets this constraint by choosing a spin lock to implement Sync Scope. You may already be aware that you can constrain your EvtIoXxx Event Processing Callbacks to run at IRQL PASSIVE_LEVEL by specifying an Execution Level constraint when you create your WDFDEVICE. Execution Level is a constraint that is specified in the WDF_OBJECT_ATTRIBUTES structure you specify when your WDFDEVICE is being created. You specify an Execution Level constraint by filling in the ExecutionLevel field as shown in Figure 6. You can see in Figure 6 we’ve set ExecutionLevel to WdfExecutionLevelPassive. This will cause the Framework to always call your EvtIoXxx routines at IRQL PASSIVE_LEVEL.

// ... beginning part of EvtDriverDeviceAdd ...

WDF_OBJECT_ATTRIBUTES_INIT_CONTEXT_TYPE(&attributes,

MY_DEVICE_CONTEXT);

//

// Ensure that all our EvtIoXxx callbacks never run in parallel

//

attributes.SynchronizationScope = WdfSynchronizationScopeDevice;

attributes.ExecutionLevel = WdfExecutionLevelPassive;

status = WdfDeviceCreate(&DeviceInit, &attributes, &device);

if (!NT_SUCCESS(status))

{

// ... whatever ...

goto Exit;

}

// ... rest of function ...

When you set a PASSIVE_LEVEL constraint on the Execution Level of your EvtIoXxx functions and you specify Sync Scope, the Framework will take note of your specified Execution Level constraint and use a Mutex to implement Sync Scope. Thus, before calling any of the routines within your Sync Scope, the Framework will acquire the Mutex. And when that routine exits, the Framework will release the Mutex. A Mutex is a type of Windows Dispatcher Object. As a result, any code that runs after the Mutex is acquired and before the Mutex is released runs at IRQL PASSIVE_LEVEL.

Thus, if you specify a PASSIVE_LEVEL constraint on your EvtIoXxx functions, and you specify a Sync Scope, you can now extend that Sync Scope to any of the routines shown in Table 1 that can be called at IRQL PASSIVE_LEVEL. Of course, the problem is now you can’t extend your Sync Scope (through the use of Automatic Serialization) to your DpcForIsr, because that must run at IRQL DISPATCH_LEVEL!

Sigh. No size fits all, in this case.
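The trade-off can be written down as a pair of small functions. This is a user-mode sketch of the reasoning in this article, not the Framework's code; the enum and function names are hypothetical. Timer callbacks are left out because their IRQL requirement is given in Table 1 rather than in the text.

```c
#include <stdbool.h>

typedef enum { MODEL_EXEC_DEFAULT, MODEL_EXEC_PASSIVE } MODEL_EXEC_LEVEL;
typedef enum { MODEL_SPIN_LOCK, MODEL_MUTEX } MODEL_LOCK_TYPE;

typedef enum {
    MODEL_CB_EVT_IO,        /* EvtIoXxx callbacks                 */
    MODEL_CB_FILE_OBJECT,   /* File Object related callbacks      */
    MODEL_CB_WORK_ITEM,     /* EvtWorkItemFunc                    */
    MODEL_CB_DPC_FOR_ISR    /* the device's DpcForIsr             */
} MODEL_CALLBACK_KIND;

/* The lock type chosen for Sync Scope, given the Execution Level. */
MODEL_LOCK_TYPE model_choose_lock(MODEL_EXEC_LEVEL Level)
{
    return (Level == MODEL_EXEC_PASSIVE) ? MODEL_MUTEX : MODEL_SPIN_LOCK;
}

/* Can a callback of this kind be serialized with this type of lock? */
bool model_can_serialize(MODEL_LOCK_TYPE Lock, MODEL_CALLBACK_KIND Kind)
{
    switch (Kind) {
    case MODEL_CB_FILE_OBJECT:
    case MODEL_CB_WORK_ITEM:
        /* Must run at PASSIVE_LEVEL; a spin lock raises to DISPATCH_LEVEL. */
        return Lock == MODEL_MUTEX;
    case MODEL_CB_DPC_FOR_ISR:
        /* Must run at DISPATCH_LEVEL; cannot share a passive-level lock. */
        return Lock == MODEL_SPIN_LOCK;
    case MODEL_CB_EVT_IO:
    default:
        /* EvtIoXxx callbacks work with whichever lock was chosen. */
        return true;
    }
}
```

Checking every callback you intend to include against the chosen lock type makes the conflict visible: no single choice returns true for both the Work Item and the DpcForIsr.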

But is That What You WANT?

Specifying IRQL PASSIVE_LEVEL as an Execution Level constraint on your EvtIoXxx functions has another effect, of course. And that effect is that any Requests that arrive at your driver at IRQL DISPATCH_LEVEL (such as those sent by another driver) will have to be deferred until the Framework calls the appropriate EvtIoXxx function in your driver at IRQL PASSIVE_LEVEL. The way the Framework does this is it passes the Request to an internal work item that runs at IRQL PASSIVE_LEVEL and calls the appropriate EvtIoXxx callback in your driver.

And thus we have yet one more item that the use of Sync Scope can impact. If all you want to do is extend Sync Scope to include Work Items, you have to set an IRQL PASSIVE_LEVEL constraint on the Execution Level of your EvtIoXxx routines. This, in turn, results in the Framework having to defer the processing of any Requests that arrive at IRQL DISPATCH_LEVEL to a work item (instead of having them call directly into your EvtIoXxx callback). If you’re a device driver, this also means that if you call WdfRequestComplete from your DpcForIsr, and doing so can trigger the Framework to present your driver another Request, the Framework will be forced to use a work item to call your EvtIoXxx Event Processing Callback instead of possibly calling you directly. Neither of these things will be a problem if most or all of your Requests arrive directly from user-mode and/or you complete most Requests at IRQL PASSIVE_LEVEL. In both of these cases your driver will already be running at IRQL PASSIVE_LEVEL, and so the Framework need not do any additional processing. But what if you’re writing an intermediate driver, and you get most of your work from other drivers in the system? Or if you’re a device driver that completes the majority of your Requests in your DpcForIsr? Forcing an IRQL PASSIVE_LEVEL constraint can introduce additional overhead and latency that you don’t want.
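The deferral decision described in this section reduces to a single test. The sketch below is a user-mode model with hypothetical names; it only encodes the two cases this article discusses (a Request arriving at PASSIVE_LEVEL or at DISPATCH_LEVEL) and is not the Framework's actual logic.

```c
#include <stdbool.h>

typedef enum {
    MODEL_ARRIVES_AT_PASSIVE,   /* for example, directly from user mode      */
    MODEL_ARRIVES_AT_DISPATCH   /* for example, from another driver or a DPC */
} MODEL_ARRIVAL_IRQL;

typedef enum {
    MODEL_CALL_DIRECTLY,        /* EvtIoXxx is called in the arriving context */
    MODEL_CALL_FROM_WORK_ITEM   /* EvtIoXxx is called later from a work item  */
} MODEL_DELIVERY;

/* How a Request reaches the driver's EvtIoXxx callback. */
MODEL_DELIVERY model_delivery(bool PassiveLevelConstraint,
                              MODEL_ARRIVAL_IRQL ArrivalIrql)
{
    if (PassiveLevelConstraint && ArrivalIrql == MODEL_ARRIVES_AT_DISPATCH) {
        /* The callback may not run at DISPATCH_LEVEL, so the call is deferred. */
        return MODEL_CALL_FROM_WORK_ITEM;
    }
    return MODEL_CALL_DIRECTLY;
}
```

The extra hop through a work item is the source of the added overhead and latency; it is only paid when the constraint is set and the Request shows up at DISPATCH_LEVEL.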

The Answer Is…

Hopefully, you can now see that while Sync Scope might initially look like a simple solution to a complex problem, it can actually be quite complex when you look at the details. So, what are the alternatives?

The main alternative to using Sync Scope to guard various data structures is to do manual serialization. This means that you manually choose a lock, and ensure you acquire and release it properly as required. As previously mentioned, with a little practice this will become almost second-nature to you as a developer. The “trick” (if you can even call it that) is to only hold the lock during the specific code sequences in which you manipulate the guarded data structures.

The topic of choosing and using serialization primitives in Windows drivers requires its own article, if not its own book. We’ll write a much more detailed article about serialization in a future issue of The NT Insider. However, without going into too much detail or discussing too many options, we can provide a brief glimpse at one of the simplest solutions.

The simplest solution to the example problem shown in Figures 1 and 2 is to serialize access to the statistics in our driver using a spin lock. To do this, we would add the definition of a lock (we’ll use a spin lock) to our Device Context previously shown in Figure 1. This updated version is shown in Figure 7.

typedef struct _MY_DEVICE_CONTEXT {

WDFDEVICE DeviceHandle;

...

//

// Statistics

// We keep the following counts/values for our device

//

KSPIN_LOCK StatisticsLock;

LONG TotalRequestsProcessed;

LONG ReadsProcessed;

LONGLONG BytesRead;

LONG WritesProcessed;

LONGLONG BytesWritten;

LONG IoctlsProcessed;

...

} MY_DEVICE_CONTEXT, *PMY_DEVICE_CONTEXT;

As you can see in Figure 7, we simply reserve space for a KSPIN_LOCK structure in our Device Context in a field we’ve named StatisticsLock. Note that the spin lock structure needs to be initialized before its first use (probably in EvtDriverDeviceAdd, just after the WDFDEVICE has been created) by calling KeInitializeSpinLock.

We would then update the code originally shown in Figure 2 to acquire the spin lock before we update the statistics and release the spin lock immediately afterwards. This is shown in Figure 8.

VOID

MyEvtIoWrite(WDFQUEUE Queue,

WDFREQUEST Request,

size_t Length)

{

NTSTATUS status = STATUS_SUCCESS;

WDFDEVICE device = WdfIoQueueGetDevice(Queue);

PMY_DEVICE_CONTEXT context;

PVOID buffer;

size_t length;

KIRQL oldIrql;

//

// Get a pointer to my device context

//

context = MyGetContextFromDevice(device);

//

// Get the pointer and length to the requestor's data buffer

//

status = WdfRequestRetrieveInputBuffer(Request,

MY_MAX_LENGTH,

&buffer,

&length);

if (!NT_SUCCESS(status)) {

// ... do something ...

}

//

// Update our device statistics

//

KeAcquireSpinLock(&context->StatisticsLock,

&oldIrql);

context->TotalRequestsProcessed++;

context->WritesProcessed++;

context->BytesWritten += length;

KeReleaseSpinLock(&context->StatisticsLock,

oldIrql);

//

// ... rest of function ...

return;

}

In Figure 8, you can see that after validating and getting the pointer and length of the user data buffer, we acquire the spin lock we previously defined in our Device Context, by calling KeAcquireSpinLock. We then manipulate the statistics. When we’re done with updating the statistics, we release the spin lock by calling KeReleaseSpinLock.

Note that it’s entirely safe to use a spin lock here because we know, as a matter of WDF architecture, that the Device Context is always located in non-paged pool. And we know that this code will never run at an IRQL greater than DISPATCH_LEVEL (a specific requirement of using this particular type of spin lock).

If the only thing you need serialization for in your EvtIoXxx functions is the statistics, using manual serialization would be a far better choice than using Sync Scope.
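The same "hold the lock only around the guarded fields" pattern can be tried out in user mode. The sketch below uses a C11 atomic_flag as a stand-in for the spin lock; it is an analogy only. Unlike KeAcquireSpinLock it does not raise IRQL, and MODEL_CONTEXT, model_init, and model_record_write are hypothetical names, not WDF or kernel APIs.

```c
#include <stdatomic.h>
#include <stddef.h>

/* Hypothetical user-mode counterpart of the statistics in the Device Context. */
typedef struct {
    atomic_flag StatisticsLock;   /* guards the three fields below */
    long        TotalRequestsProcessed;
    long        WritesProcessed;
    long long   BytesWritten;
} MODEL_CONTEXT;

/* Like the kernel spin lock, the flag must be initialized before first use. */
void model_init(MODEL_CONTEXT *Context)
{
    atomic_flag_clear(&Context->StatisticsLock);
    Context->TotalRequestsProcessed = 0;
    Context->WritesProcessed = 0;
    Context->BytesWritten = 0;
}

static void model_lock(atomic_flag *Lock)
{
    while (atomic_flag_test_and_set_explicit(Lock, memory_order_acquire)) {
        /* spin until the current holder releases the lock */
    }
}

static void model_unlock(atomic_flag *Lock)
{
    atomic_flag_clear_explicit(Lock, memory_order_release);
}

/* Only the statistics update runs under the lock. */
void model_record_write(MODEL_CONTEXT *Context, size_t Length)
{
    /* ... work that does not touch the statistics runs unlocked ... */

    model_lock(&Context->StatisticsLock);
    Context->TotalRequestsProcessed++;
    Context->WritesProcessed++;
    Context->BytesWritten += (long long)Length;
    model_unlock(&Context->StatisticsLock);

    /* ... the rest of the processing also runs unlocked ... */
}
```

The point of the design is the size of the locked region: three field updates, rather than the entire callback as with Sync Scope.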

Sync Scope Guidance

Given everything we’ve discussed so far, when does Sync Scope make the most sense? Different developers will, of course, have different opinions on this topic. Here at OSR, these are the guidelines we typically recommend:

- Recognize that Sync Scope is almost never the best method of serialization in a WDF driver. Think carefully about your alternatives before specifying a Sync Scope.

- Sync Scope can be an acceptable method in a production driver if:

  - A great deal of code in your EvtIoXxx function requires serialization; OR

  - The amount of time spent within the Sync Scope will be very low over time. By this, we mean that your EvtIoXxx functions and any other functions within your Sync Scope run infrequently; OR

  - You’re very new to the world of Windows driver development, and making your driver work correctly is more important than making your driver work optimally; OR

  - Less than optimal performance – for your driver and the system on which your driver is running – is acceptable.

- Avoid using Sync Scope as a quick and dirty method of using a “big lock” model during driver bring-up. It’s long been our experience that building the proper serialization into a driver while the code is being written is far easier than retrofitting proper serialization to that driver afterwards. Ask us how we know this (blush).

- Sync Scope, extended to whatever functions you might require, can be a quick way to check for a race condition in your EvtIoXxx routines when you’re trying to chase-down a driver bug. Of course, this isn’t nearly as nifty as it sounds due to the inherent issues in using Sync Scope that we’ve discussed in this article.

Thus, the bottom line on Sync Scope is that it’s probably best if you use it only under some limited and special conditions. You know the saying, “If it sounds too good to be true, it probably is”? That’s the bottom line with Sync Scope.

Have fun, and be careful out there.
