Big changes in the world of storage happen at the rate of about one per decade. Many of you will remember the IDE interface, which was later called ATA, and which even later became known as PATA. The IDE/ATA/PATA interface was first documented in 1989, and it took until 1994 for the standard to be agreed upon and completed. About ten years later, in 2003, SATA was initially introduced.

Now it’s 2014 and SATA is nice, but it just isn’t optimal anymore. It’s time for a whole new interface. An interface that takes into account not only the advances in storage technology, but also the advances in how computers work these days. That interface is NVM Express, which everyone refers to as NVMe.

NVMe is a storage interface specification for Solid State Drives (SSDs) on the PCI Express (PCIe) bus. The standard defines both a register-level interface and a command protocol used to communicate with NVMe devices. An interesting aspect of NVMe is that, in current implementations, the NVMe controller and drive are fully integrated. So, you don’t buy an NVMe controller and then one or more NVMe drives. Rather, the drive and the controller form a single unit that plugs into the PCIe bus. While this may change in the future, all current NVMe products follow this model.

In this article, we’ll describe some of the technical features of NVMe, focusing on how it’s been designed with one thing in mind: Speed.

Why It’s Cool – Getting Commands To and From the Device

So what’s cool about NVMe? The coolest thing about it is that everything in the spec has been designed with the goal of getting the most performance out of a storage device running on a modern computer system. The interface between the host and the SSD is based on a series of paired Submission and Completion queues that are built by the driver and shared between the driver (running on the host) and the NVMe device. Interestingly enough, the queues themselves can be located either in traditional host shared memory or in memory provided by the NVMe device.

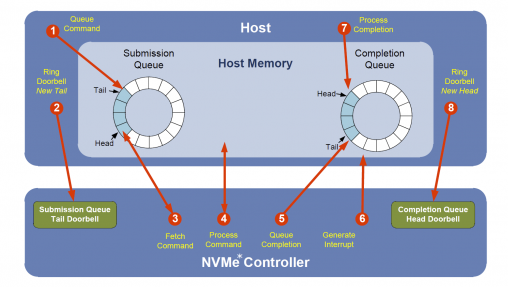

Once the Submission Queues and Completion Queues are configured, they are used for almost all communication between the driver and the NVMe device. When new entries are placed on a Submission Queue, the driver tells the device about them by writing the new tail pointer to a hardware doorbell register. This register is specific to the Submission Queue being updated.

When the NVMe drive completes a command, it puts an entry on a Completion Queue (that has been previously associated with the Submission Queue from which the command was retrieved) and generates an interrupt. After the driver completes processing a group of Completion Queue entries, it signals this to the NVMe device by writing the Completion Queue’s updated head pointer to a hardware doorbell register. This is shown in Figure 1 (for IDF 14).
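To make the doorbell handshake described above concrete, here is a minimal sketch in Python. It is a toy model, not driver code: the class `QueuePair` and all of its method and field names are made up for illustration, the "doorbell registers" are plain integers, and the driver peeks at the device's head pointer directly (a real driver learns it from Completion Queue entries).

```python
from collections import deque


class QueuePair:
    """Toy model of one Submission/Completion Queue pair (hypothetical names)."""

    def __init__(self, depth):
        self.depth = depth
        self.sq = [None] * depth      # Submission Queue ring, filled by the driver
        self.cq = deque()             # Completion Queue, filled by the device
        self.sq_tail = 0              # driver's next free Submission Queue slot
        self.sq_tail_doorbell = 0     # last tail value written to the doorbell
        self.sq_head = 0              # device's next slot to consume
        self.cq_head_doorbell = 0     # last head value written to the doorbell

    def submit(self, command_id, opcode):
        """Driver: place one entry on the ring. The device is not told yet."""
        next_tail = (self.sq_tail + 1) % self.depth
        if next_tail == self.sq_head:
            raise RuntimeError("submission queue full")
        self.sq[self.sq_tail] = (command_id, opcode)
        self.sq_tail = next_tail

    def ring_sq_doorbell(self):
        """Driver: publish the new tail pointer to the device."""
        self.sq_tail_doorbell = self.sq_tail

    def device_process(self):
        """Device: consume entries up to the doorbell value and post completions."""
        posted = 0
        while self.sq_head != self.sq_tail_doorbell:
            command_id, _opcode = self.sq[self.sq_head]
            self.sq_head = (self.sq_head + 1) % self.depth
            self.cq.append((command_id, "success"))
            posted += 1
        return posted  # a real device would raise an interrupt at this point

    def driver_reap(self):
        """Driver: handle a group of completions, then write the head doorbell once."""
        done = []
        while self.cq:
            done.append(self.cq.popleft())
        self.cq_head_doorbell = (self.cq_head_doorbell + len(done)) % self.depth
        return done
```

Notice that the device sees nothing until `ring_sq_doorbell()` is called, so a driver can queue several commands and pay for only one register write.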

There are separate queues for Administration operations (such as creating and deleting queues or updating firmware on the device) and for ordinary I/O operations (such as Read and Write). This ensures that I/O operations don’t get “stuck” behind long-running Admin activities. The NVMe specification allows up to 64K individual queues, and each queue can have as many as 64K entries! Most devices don’t allow this many, but Windows Certification requires NVMe devices to support at least 64 queues (one Admin Queue and 63 I/O Queues) for server systems and 4 queues (one Admin Queue and 3 I/O Queues) for client systems.

The advantage of allowing lots of entries per I/O Submission Queue is probably obvious: The ability to start many I/O operations simultaneously allows the device to stay busy and schedule its work in the most efficient way possible. This maximizes device throughput.

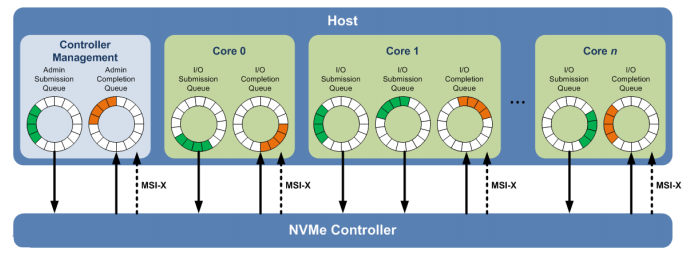

However, you might be wondering why having so many Submission Queues and Completion Queues is advantageous. The answer to this is surprisingly simple: On multiprocessor systems, the ability to have multiple queues allows you to have one Submission and Completion Queue pair per processor (core). Having a pair of queues that are unique to each core allows the driver to process I/O initiations and completions without having to worry about contention from other processors, and thus allows these actions to be performed almost entirely without acquiring and holding any locks. What makes this work particularly well is that NVMe strongly favors the use of MSI-X for interrupts. MSI-X allows the NVMe device to direct its interrupts to a particular core. This is such a significant advantage for NVMe that support for MSI-X is required for NVMe controller Certification on Windows Server systems. With MSI-X (and proper driver support), a request that is submitted by the NVMe driver on a given CPU will result in an interrupt indicating its completion on that same CPU. The driver’s DpcForIsr will also run on that same CPU. And, the Completion Queue indicating the request’s status will be unique to that CPU. You have to admit: That’s very cool. Lock free, and optimal for NUMA systems as well.
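The per-core arrangement can be sketched in a few lines of Python. This is only an illustration of the bookkeeping: `PerCoreQueues` and its members are hypothetical names, and the "interrupt steering" is just a dictionary lookup standing in for an MSI-X vector targeted at a core.

```python
class PerCoreQueues:
    """Toy model: one I/O queue pair and one interrupt target per core."""

    def __init__(self, num_cores):
        # Queue ID 0 is the Admin queue, so I/O queue pairs are numbered from 1.
        self.queue_for_core = {core: core + 1 for core in range(num_cores)}
        # Each queue pair's interrupt is steered back to the core that owns it.
        self.core_for_queue = {qid: core for core, qid in self.queue_for_core.items()}
        # Each list is only ever touched by its own core, so no lock is modeled.
        self.in_flight = {qid: [] for qid in self.core_for_queue}

    def submit(self, current_core, command_id):
        """Queue a command on the pair owned by the submitting core."""
        qid = self.queue_for_core[current_core]
        self.in_flight[qid].append(command_id)
        return qid

    def complete(self, qid, command_id):
        """Retire a command and return the core that takes the interrupt."""
        self.in_flight[qid].remove(command_id)
        return self.core_for_queue[qid]
```

A command submitted on core 2 goes to queue 3 and its completion comes back on core 2, which is the property that lets the driver skip locking.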

But, wait, you say. If an MSI-X will be generated for each completion operation, won’t that create a lot of overhead? Well, yes, it will… IF the controller generates one interrupt for each Completion Queue entry it processes. But the NVMe specification includes native support for interrupt coalescing. Using this feature, the driver can programmatically establish interrupt count and timing thresholds. The result? Interrupts are automatically combined by the NVMe device, within driver-defined limits.
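Here is a rough simulation of how a count threshold and a time threshold might combine. The function `coalesce` and its parameters `max_count` and `max_wait` are invented for this sketch, and the policy shown (fire when either limit is reached, with the timer starting at the first pending completion) is an assumption. Real devices may apply the thresholds differently.

```python
def coalesce(completion_times, max_count, max_wait):
    """Return a (time, batch_size) tuple for each interrupt the toy device raises."""
    interrupts = []
    pending = 0
    first_pending_time = None
    for t in sorted(completion_times):
        # Timer expiry: flush whatever has already waited max_wait time units.
        if pending and t - first_pending_time >= max_wait:
            interrupts.append((first_pending_time + max_wait, pending))
            pending = 0
        if pending == 0:
            first_pending_time = t
        pending += 1
        # Count threshold: enough completions have piled up, so fire right away.
        if pending >= max_count:
            interrupts.append((t, pending))
            pending = 0
    if pending:
        # Nothing else arrives, so the timer eventually flushes the leftovers.
        interrupts.append((first_pending_time + max_wait, pending))
    return interrupts
```

For example, `coalesce([0, 1, 2, 3, 50, 51, 200], max_count=4, max_wait=10)` delivers seven completions with three interrupts instead of seven.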

Figure 2 illustrates a host that’s communicating with a controller via a pair of Admin queues that are used for all controller management operations, and Submission Queues that are set up for I/O processing per core. (This diagram has appeared in numerous NVMe presentations without citation and its origin is unknown. It is thus assumed to be in the public domain.)

Another interesting option is that NVMe allows the driver to create multiple Submission Queues per core and establish different priorities among those queues. While Submission Queues are ordinarily serviced in a round robin manner, NVMe optionally supports a weighted round robin scheme that allows certain queues to be serviced more frequently than others. Figure 2 shows two Submission Queues configured for Core 1. Presumably, these queues would differ in terms of their priority. Of course, having a single additional Submission Queue on one specific core as shown in Figure 2 probably isn’t a very realistic configuration, but it illustrates the point. And it sure looks great in the diagram.
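The difference between plain and weighted round robin is easy to see in a small sketch. The function `weighted_round_robin` and the queue names below are hypothetical, and the policy (take up to `weight` commands from each queue per pass) is a simplified stand-in for whatever arbitration a given controller actually implements.

```python
from collections import deque


def weighted_round_robin(queues, weights):
    """Return the order in which a toy arbiter would fetch commands.

    queues:  dict mapping queue name -> list of pending commands
    weights: dict mapping queue name -> commands fetched per pass
    """
    pending = {name: deque(cmds) for name, cmds in queues.items()}
    order = []
    while any(pending.values()):
        for name, q in pending.items():
            # Fetch up to `weight` commands from this queue, then move on.
            for _ in range(weights[name]):
                if not q:
                    break
                order.append(q.popleft())
    return order
```

With every weight set to 1 this degenerates to ordinary round robin. Giving one queue a weight of 2 lets it drain twice as fast as its neighbor without starving the neighbor.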

The format of the queues themselves is designed for maximum speed and efficiency. Submission Queues comprise fixed-size, 64-byte entries. Each entry describes one operation to be performed by the device such as a Read, Write, or Flush. The 64-byte command queue entry itself has enough information in it to describe a Read or Write operation up to 8K in length. Transfers up to 2MB can be accommodated by setting up a single, additional 4K table entry. Completion Queues are similarly efficient. Completion Queue entries are also fixed size, but only 16 bytes each. Each Completion Queue entry includes completion status information and has a reference to the Submission Queue entry for the operation that was completed. Thus, it’s relatively straightforward to correlate a given Completion Queue entry with its original command.
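The sizes quoted above can be checked with a little arithmetic. The sketch below assumes 4K host memory pages and 8-byte page pointers, with two pointers held directly in the command. The field layouts are our reading of the entry formats, written out only to show that the sizes add up, so check them against the specification before relying on them. All Python names here are made up for the example.

```python
import struct

PAGE_SIZE = 4096      # assumed host memory page size
POINTER_SIZE = 8      # assumed size of one 64-bit page pointer

# Assumed Submission Queue entry layout (little-endian, no padding):
# opcode, flags, command id, namespace id, reserved, metadata pointer,
# data pointer 1, data pointer 2, six command-specific dwords.
SQ_ENTRY = struct.Struct("<BBHIQQQQ6I")

# Assumed Completion Queue entry layout:
# command-specific dword, reserved dword, SQ head, SQ id, command id, status.
CQ_ENTRY = struct.Struct("<IIHHHH")


def max_transfer_inline():
    """Two page pointers fit in the command itself."""
    return 2 * PAGE_SIZE


def max_transfer_with_one_table():
    """One extra 4K table holds 512 pointers, each naming a 4K page."""
    return (PAGE_SIZE // POINTER_SIZE) * PAGE_SIZE


def correlate(cq_bytes):
    """Pull out the fields that tie a completion back to its command."""
    _dw0, _dw1, _sq_head, sq_id, command_id, _status = CQ_ENTRY.unpack(cq_bytes)
    return sq_id, command_id
```

Under these assumptions `SQ_ENTRY.size` is 64, `CQ_ENTRY.size` is 16, the inline limit is 8K, and a single table covers 512 x 4K = 2MB. The pair returned by `correlate` (which queue, which command) is all a driver needs to find the original request.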

Why It’s Cool – The Commands Themselves

If you’ve ever worked with SCSI, or even SATA, you know what a morass those command sets have become. There are a zillion different commands, many of them required, many of them with different options. NVMe specifically sought to simplify this situation. In fact, in NVMe there are only three mandatory I/O commands: Read, Write, and Flush. That’s it! There are an additional nine optional I/O commands, including “Vendor Specific.” All in all, that’s a pretty lean command set for performing I/O operations.

In terms of admin commands, which are used for configuration and management, there are only ten required and six optional commands (again, including “Vendor Specific”). The required admin commands include Create I/O Submission Queue, Delete I/O Submission Queue, Create I/O Completion Queue, and Delete I/O Completion Queue. There are also Identify, Abort, Get Log Page, Set Features, Get Features, and Async Event Request. That’s the whole list.

Needless to say, a small well-defined command set makes command processing pretty streamlined in the driver.

But there’s also a lot of power that can be optionally implemented using these few commands. For example, there are provisions for end-to-end data protection (what SCSI calls DIF and DIX). This feature allows (among other options) a CRC value to be stored with each logical block that’s written and compared to a computed value when the block is read.
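The store-then-verify idea behind the CRC option looks roughly like this. The sketch assumes a 16-bit CRC with polynomial 0x8BB7 (the one used by SCSI DIF). The functions `write_block` and `read_block` are invented stand-ins for the device's behavior, and real protection information carries more than just the CRC.

```python
def crc16(data, poly=0x8BB7):
    """Bitwise 16-bit CRC, most significant bit first, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ poly) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def write_block(data):
    """Store the logical block together with its guard value."""
    return bytes(data), crc16(data)


def read_block(stored):
    """Recompute the CRC on read and compare it with the stored guard."""
    data, guard = stored
    if crc16(data) != guard:
        raise ValueError("guard mismatch: block was corrupted after it was written")
    return data
```

If even a single bit of the stored block changes between the write and the read, the recomputed CRC no longer matches and the read fails instead of silently returning bad data.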

A more forward looking feature is the Dataset Management (DSM) support that can optionally accompany Read and Write commands. DSM allows the data being written to be tagged with various attributes, including expected access frequency (one time write, frequent writes but infrequent reads, infrequent writes but frequent reads, etc.). Other information that can be specified includes whether the data is incompressible, or whether a read or write is expected to be part of a larger, sequential operation. While it might be a while before Windows (and even NVMe drives) supports all these features, it’s awesome to know that they’re there for future use.

And It’s Here Now

You might not realize it, but Windows began shipping with a driver that supports NVMe devices with Windows 8.1. NVMe devices themselves are also beginning to become available. Intel’s DC P3700 series drive is the first NVMe device to ship in volume to end users, and it’s widely available for sale.

But why should you care? Because NVMe isn’t just designed to be fast, it really is FAST, that’s why. According to our own testing (which confirms what Tom’s Hardware found), the Intel P3700 NVMe drives positively fly: Doing 4K random reads, we got 460,000 I/O Operations per Second (IOPS). Max sequential read throughput is about 2.8GB/s. These aren’t theoretical numbers, they’re real measurements. Now, granted that is only for read operations. Write operations are significantly slower, and depend a lot on the state of the drive when it’s being written. But still, there’s no denying NVMe is fast.

Considering the performance, the Intel drives aren’t impossibly expensive, either. You can get the 400GB version on Amazon for about $1200. Of course, as more NVMe drives come to market, we can expect the price to come way down. And, remember, this is an SSD device.

I was just telling Dan that I need at least two of these: One 800GB drive as my boot volume and another one for my paging file. Dan’s response? Well, I’m still waiting for him to answer my email.
