Last reviewed and updated: 10 August 2020

I wrote a blog post recently in which I tried to make the point (among others) that having a kernel-mode driver that is demonstrably working (to the point where you never see it crash or leak) is not necessarily the same thing as having a kernel-mode driver that is correctly written. Of course, anyone who’s programmed for ten seconds knows that a program that looks like it “works” isn’t necessarily a program that works correctly. But that’s not what I’m trying to say here.

“Working” Does Not Imply “Correct”

I’m trying to call attention to the fact that when you write a kernel-mode driver, the environment in which that driver code runs – the particulars of the system your code runs on and/or the implementation choices made in the current version of Windows or WDF – may allow your driver to run correctly. And this can make it seem that your driver was written correctly. However, unless your driver was written to carefully follow the architectural rules, you may get a big and very bad surprise when the environment in which your driver runs changes. What had been “working right for months (or even years)” can all of a sudden stop working.

Windows kernel-mode development history is replete with these issues. For those fond of history, I’ll just mention things like the Windows DMA API model, Port I/O Space resources appearing in Memory Space, and the necessity (or not) of calling KeFlushIoBuffers after completing a DMA transfer. Perhaps the best example of what I’m talking about is how, back in the 32-bit world, devs frequently used ULONG when they meant PVOID. They weren’t then, and aren’t now, the same thing. But storing your pointers in a ULONG worked. You could test your code all day long and it would always work. But that didn’t make it correct. And when 64-bit Windows was introduced, a lot of people got that big, very bad, surprise and had to diagnose and fix their driver code. If they’d just done the right thing in the first place, they probably would have saved themselves some pain.
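To make the ULONG-versus-PVOID point concrete, here is a minimal user-mode sketch in portable C. It is not driver code: `ulong32_t` is a hypothetical stand-in for the 32-bit ULONG type, and both function names are made up for illustration. The only assumption is a compiler that provides `uintptr_t`, which plays the role that ULONG_PTR plays in Windows.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for ULONG: always 32 bits wide. */
typedef uint32_t ulong32_t;

/*
 * Returns 1 if a pointer-sized value survives being stored in a 32-bit
 * integer, 0 if the upper bits were lost. On a 32-bit build this always
 * returns 1, which is exactly why the bug went unnoticed for years.
 */
int fits_in_32_bits(uintptr_t value)
{
    ulong32_t narrowed = (ulong32_t)value;  /* drops bits 32..63, if any */
    return (uintptr_t)narrowed == value;
}

/*
 * The correct pattern: keep the pointer in a pointer-sized integer.
 * Returns 1 if the pointer round-trips unchanged.
 */
int round_trips_through_uintptr(const void *p)
{
    uintptr_t stored = (uintptr_t)p;
    return (const void *)stored == p;
}
```

On a 32-bit build both functions report success for every pointer, so no amount of testing distinguishes the correct version from the incorrect one. Only on a 64-bit build, with an address above 4GB, does the narrowing version start losing bits.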

And so it is today. KMDF makes driver writing something that most any good C/C++ programmer can do, with very little experience and even less knowledge of Windows kernel-mode architecture. You Google around, you start with some sample code from the Internet, you ask a few questions on NTDEV, and bingo! Your driver practically writes itself.

Or not. And before you think I’m singling out the more “casual” Windows driver devs, this problem is absolutely not restricted to them. Even experienced Windows driver specialists – folks who write Windows drivers every day for a living – sometimes forget, or just don’t know, some of the more arcane architectural rules of the road. That’s because, no matter how simple it seems, kernel-mode programming is hard and there are a lot of little issues about which you must be aware.

What Our Code Reviews Tell Us

One of the services we provide here at OSR is design and code review. We review a lot of drivers over the course of a year. And we see all types of drivers: Software-only kernel services that collect data and return it to user mode, file system filters, drivers for programmed I/O devices, and drivers that support busmaster DMA devices. We rarely see drivers that plainly don’t work (although we do see some!). But we do see a lot of architectural errors.

So that you don’t have to hire us to look at your code to find these errors for you, we’ll describe for you the three most common errors that we see in KMDF drivers. And we’ll also talk about how those errors can be fixed. We see these errors in almost equal numbers, so we’ll present them in no particular order.

Common Error #1: Errors Involving Process Context Assumptions

The common architectural error that makes me the most crazy has to do with assuming the context in which an Event Processing Callback is executing. In KMDF, EvtIoXxx Event Processing Callbacks are called by the Framework, for a particular Queue, to provide the driver with a Request to process. So, for example, when a Queue has a read type Request to pass to the driver, the Framework will call the EvtIoRead Event Processing Callback specified by the driver for that Queue.

In many relatively sophisticated drivers, we see code similar to that shown in Figure 1.

A quick look at this will show you this code is reasonably carefully written. It frames the call to MmMapLockedPagesSpecifyCache in a try block. It handles the exception appropriately. And, though you can’t see it in Figure 1, I can tell you that this code even cleans-up correctly at the “done” label.

    // Map the device memory into the user's virtual address space.
    //
    // Note: Mapping to UserMode can raise an exception. We protect against
    // that by doing the mapping within a try block.
    //
    __try {
        MappedUserAddress = MmMapLockedPagesSpecifyCache(
                                MdlAddress,
                                UserMode,
                                MmNonCached,
                                NULL,
                                FALSE,
                                NormalPagePriority);
    }
    __except (EXCEPTION_EXECUTE_HANDLER) {
        MappedUserAddress = NULL;
    }

    //
    // Did that work?
    //
    if (MappedUserAddress == NULL) {
        //
        // No! The only reason it could fail is lack of PTEs.
        //
        status = STATUS_INSUFFICIENT_RESOURCES;
        goto done;
    }

So what’s not to like?

Note the specification of “UserMode” as the target address space (what the docs refer to as “access mode”) into which to map the memory.

What’s not to like is that EvtIoXxx routines are, by architectural definition, always called in an arbitrary process and thread context. Recall that the phrase “called in an arbitrary process and thread context” just means that we can’t know in advance the context the driver will be called in. In other words, we don’t know which user-mode thread, in which process, will be the “current thread” when we’re called.

It’s not like this architectural constraint is some sort of secret. The WDK docs explicitly mention this under the topic of Request Handlers:

Can’t be more clear than that, right? And of course, an arbitrary process and thread context is not acceptable when you call MmMapLockedPagesSpecifyCache, given that the entire point of this call (when the AccessMode parameter is set to UserMode) is to map the buffer into the user-mode address space of a specific process. So, in order to be able to call MmMapLockedPagesSpecifyCache effectively, the caller (the driver’s EvtIoXxx routine in this case) must be running in the context of the process into which the memory is to be mapped. It’s not like this is a secret either, as the WDK docs for the function clearly show in the Remarks section:

To further emphasize this fact, there’s a specific EvtIo Event Processing Callback that is guaranteed to be called in the context of the requesting process. That routine is EvtIoInCallerContext. So, there is a way to get the behavior that the dev of the code in Figure 1 is seeking. It’s just that that way is not from within the EvtIoRead Event Processing Callback.

So, you may ask, if EvtIoRead runs in an arbitrary process context, and MmMapLockedPagesSpecifyCache relies on running in a specific process context to be effective, how does the code shown in Figure 1 ever work?

The answer to that is: It only works because of the way KMDF happens to be written currently. If your driver is called directly from a user-mode application, and if several other conditions apply, KMDF can wind-up calling your driver in the context of the process that sent the I/O Request. You can see the exact sequence of events, and the conditions under which KMDF will call your driver in the context of the requesting process, in the WDF source code on GitHub. If you’re curious about the details, walk through the code for the method FxIoQueue::DispatchEvents and you’ll see exactly what I’m talking about.

You can test your driver all day, and it’ll work. But if there’s a change in how your driver code is executed – like, for example, your device hasn’t been powered-up yet when a Request arrives – or if you make what seems to be a small change in your driver’s code – let’s say you add a SynchronizationScope to your Queue, change your Queue’s Dispatch Type, or decide to allow your device to automatically idle in a low power state – all of a sudden, your driver no longer works.

That’s not the extent of the problem, either. Your driver’s behavior could become broken very easily by some small change to KMDF by the development team.

And because the code you’ve written is fragile, cutting and pasting it into some other driver will propagate the problem. The implementation constraints that happen to let it work in your original driver may not hold in the new one, and the result may well be a driver that doesn’t work.

So the moral of the story is: EvtIoXxx routines (except EvtIoInCallerContext, mentioned above) are called in arbitrary process and thread context. The fact that your driver happens to be called sometimes in the specific thread context that you want does not change this fact. You have to follow the architectural rules. Proper operation doesn’t mean correct implementation.
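For reference, here is a rough sketch of how a driver might hook EvtIoInCallerContext. It is a driver fragment that builds only with the WDK, not a complete driver, and the names MyRegisterInCallerContext and MyEvtIoInCallerContext are hypothetical. The work that depends on the caller’s process context (such as the mapping in Figure 1) is deliberately left as a comment, because doing it properly also involves cleanup that is beyond this sketch.

```c
#include <ntddk.h>
#include <wdf.h>

EVT_WDF_IO_IN_CALLER_CONTEXT MyEvtIoInCallerContext;   // hypothetical name

//
// Hypothetical helper: call this from EvtDriverDeviceAdd, before
// WdfDeviceCreate, to register the in-caller-context callback.
//
VOID
MyRegisterInCallerContext(
    _In_ PWDFDEVICE_INIT DeviceInit
    )
{
    WdfDeviceInitSetIoInCallerContextCallback(DeviceInit,
                                              MyEvtIoInCallerContext);
}

VOID
MyEvtIoInCallerContext(
    _In_ WDFDEVICE  Device,
    _In_ WDFREQUEST Request
    )
{
    NTSTATUS status;

    //
    // Work that relies on the requesting process context belongs here,
    // and not in EvtIoRead. (Omitted in this sketch.)
    //

    //
    // Hand the Request back to the Framework so that it is delivered
    // to the driver's Queues in the usual way.
    //
    status = WdfDeviceEnqueueRequest(Device, Request);

    if (!NT_SUCCESS(status)) {
        WdfRequestComplete(Request, status);
    }
}
```

The design point is that the context-dependent work moves to the one callback that is architecturally defined to run in the requestor’s context, while the EvtIoXxx routines only ever handle Requests in a way that is context-neutral.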

Common Error #2: Errors Involving IRQL Assumptions

My second-favorite bug, and – again – one that we see all the time, is making invalid assumptions about the IRQL at which various KMDF callbacks will run. We most frequently see this in EvtIoXxx Event Processing Callbacks and in Request Completion Routines.

By default, both EvtIoXxx Event Processing Callbacks and Request Completion Routines are architecturally defined to run at any IRQL less than or equal to IRQL DISPATCH_LEVEL. Despite this, the drivers that we review often assume that these routines will run at IRQL PASSIVE_LEVEL.

These assumptions take many forms. Sometimes the code for these routines is defined to be pageable (arrgh… a particular hot-button of mine). Sometimes, you see this code serialize access to shared data structures with a synchronization mechanism, such as a mutex, that assumes the code is running at an IRQL less than IRQL DISPATCH_LEVEL. Or, sometimes, we see something in the EvtIoRead Event Processing Callback like the code in Figure 2:

    // Send the request synchronously with an arbitrary timeout of 60 seconds
    //
    WDF_REQUEST_SEND_OPTIONS_INIT(&SendOptions,
                                  WDF_REQUEST_SEND_OPTION_SYNCHRONOUS);

    WDF_REQUEST_SEND_OPTIONS_SET_TIMEOUT(&SendOptions,
                                         WDF_REL_TIMEOUT_IN_SEC(60));

    Status = WdfRequestAllocateTimer(IoctlRequest);

    if (!NT_SUCCESS(Status)) {
        goto TestReadWriteEnd;
    }

    if (!WdfRequestSend(IoctlRequest, IoTarget, &SendOptions)) {
        Status = WdfRequestGetStatus(IoctlRequest);
    }

    if (NT_SUCCESS(Status)) {
        *IoTargetOut = IoTarget;
    }

In Figure 2, you see the driver calling WdfRequestSend having previously specified that the Send operation be performed synchronously. Two minutes of thought will bring to your attention the fact that, in order for the Send operation to be synchronous, the caller will have to wait. That wait will be on a Windows Dispatcher Object, and waiting on a Dispatcher Object requires that the caller be running below IRQL DISPATCH_LEVEL. Ooops.

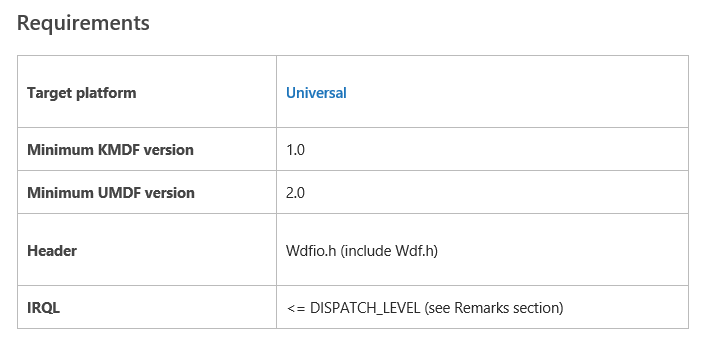

Again, there’s no reason that the dev who owns this code should assume that their driver’s EvtIoRead Event Processing Callback function will be called at IRQL PASSIVE_LEVEL. This is very clear in the WDK documentation. It’s even in the cute little table they provide on the page for that DDI (See Figure 3).

And, to be clear, there’s a method that a driver dev can use that will guarantee that their EvtIoXxx Event Processing Callbacks will execute at IRQL PASSIVE_LEVEL, if that’s what they want. That method is to establish an ExecutionLevel Constraint of WdfExecutionLevelPassive prior to creating the WDFDEVICE or WDFQUEUE. The WDK docs even mention this explicitly.

However, in most cases, you’ll be able to test and run your driver for days (or longer!) and you’ll never see EvtIoRead called at anything other than IRQL PASSIVE_LEVEL. Why? Because that happens to be the way KMDF is currently written. But… make a small change in your code, and you can wind-up with a blue screen. For example, in most cases setting Synchronization Scope will (by default) cause your driver’s EvtIoXxx functions to be called at IRQL DISPATCH_LEVEL. Surprise! Ooops.

So, what’s the ultimate lesson here? EvtIoXxx Event Processing Callbacks can run at any IRQL less than or equal to IRQL DISPATCH_LEVEL. And this is true regardless of how many times you try it and find your callbacks executing at IRQL PASSIVE_LEVEL. If you want your Event Processing Callbacks to run at IRQL PASSIVE_LEVEL, all you need to do is establish an ExecutionLevel Constraint of WdfExecutionLevelPassive, and you’re architecturally in the clear.
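Establishing that constraint takes very little code. The following is a minimal sketch of one way to do it for a Queue. It is a driver fragment that builds only with the WDK; the helper name MyCreatePassiveLevelQueue and the callback name MyEvtIoRead are hypothetical, and the choice of a default Queue with parallel dispatching is just an assumption for the example.

```c
#include <ntddk.h>
#include <wdf.h>

EVT_WDF_IO_QUEUE_IO_READ MyEvtIoRead;   // hypothetical, defined elsewhere

//
// Hypothetical helper: creates a default Queue whose EvtIoXxx callbacks
// the Framework will call at IRQL PASSIVE_LEVEL.
//
NTSTATUS
MyCreatePassiveLevelQueue(
    _In_  WDFDEVICE  Device,
    _Out_ WDFQUEUE  *Queue
    )
{
    WDF_IO_QUEUE_CONFIG   queueConfig;
    WDF_OBJECT_ATTRIBUTES queueAttributes;

    WDF_IO_QUEUE_CONFIG_INIT_DEFAULT_QUEUE(&queueConfig,
                                           WdfIoQueueDispatchParallel);
    queueConfig.EvtIoRead = MyEvtIoRead;

    //
    // The ExecutionLevel Constraint is set in the object attributes
    // that are passed when the Queue is created.
    //
    WDF_OBJECT_ATTRIBUTES_INIT(&queueAttributes);
    queueAttributes.ExecutionLevel = WdfExecutionLevelPassive;

    return WdfIoQueueCreate(Device, &queueConfig, &queueAttributes, Queue);
}
```

With the constraint in place, a synchronous send from EvtIoRead no longer depends on how KMDF happens to call you. Note that this sketch only covers the Queue’s EvtIoXxx callbacks.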

Common Error #3: Errors Involving I/O Targets

I could write, and I think at some point I have written, entire articles on the many ways that WDF I/O Targets can be unintentionally abused and misused. But, by far, the most common error we see is that shown in Figure 4.

    WDF_REQUEST_SEND_OPTIONS_INIT(
        &RequestSendOptions,
        WDF_REQUEST_SEND_OPTION_SEND_AND_FORGET);

    WdfRequestFormatRequestUsingCurrentType(Request);

    Result = WdfRequestSend(
                 Request,
                 devContext->MyIoTarget,
                 &RequestSendOptions);

    if (Result == FALSE) {
        Status = WdfRequestGetStatus(Request);
        WdfRequestComplete(Request, Status);
    }

The code in Figure 4 is either 100% correct or 90% incorrect. And on what does this depend? It depends on whether the I/O Target that’s being used is a Local I/O Target or a Remote I/O Target.

The architectural rules for WdfRequestFormatRequestUsingCurrentType require the I/O Target that’s being used to be the driver’s Local I/O Target only. It is not valid to call WdfRequestFormatRequestUsingCurrentType on a Request that’s being sent to a Remote I/O Target. Again, the WDK docs for this function make this abundantly clear:

The WdfRequestFormatRequestUsingCurrentType method formats a specified I/O request so that the driver can forward it, unmodified, to the driver’s local I/O target.

When you want to forward a Request to a Remote I/O Target, you always need to format it explicitly for that particular I/O Target. This means you’ll need to call a WDF I/O Target function, one that starts with WdfIoTargetFormatRequestForXxxx. You’re not allowed to take the WdfRequestFormatRequestUsingCurrentType shortcut if the I/O Target is a Remote I/O Target.

But there’s more. In many cases, you’re not allowed to specify _SEND_AND_FORGET when you’re sending a Request to a Remote I/O Target. In this particular case, the WDK docs for this option really aren’t so very clear on this point. But the correct documentation is indeed provided:

Your driver cannot set the WDF_REQUEST_SEND_OPTION_SEND_AND_FORGET flag in the following situations:

…

- The driver is sending the I/O request to a remote I/O target and the driver specified the WdfIoTargetOpenByName flag when it called WdfIoTargetOpen.

- The driver is sending the I/O request to a remote I/O target, and the driver specified both the WdfIoTargetOpenUseExistingDevice flag and a TargetFileObject pointer when it called WdfIoTargetOpen.

…

The big one to notice here is the first restriction, above. Many times when a Remote I/O Target is opened, it’s opened by name.

But, as with our previously described cases, when this code works for a given situation it will almost always stay working indefinitely. The reason is an optimization in KMDF. But if the environment changes even just slightly, let’s say your Remote I/O Target gets a filter driver loaded on top of it, you’ll get a blue screen. Not a good thing for throughput, that.

The lesson from this third class of error is to be very careful, and very particular, about how you use WDF I/O Targets. Read the docs carefully. Read the article I wrote that provides a set of rules that you can follow so you won’t make a mistake. Be sure that the WDK documentation explicitly allows the combination of DDIs and operations you’re attempting to perform. And remember: whenever you’re using Remote I/O Targets, you need to specifically format the Request for that specific I/O Target using a call of the form WdfIoTargetFormatRequestForXxxx.
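As an illustration of that last rule, here is a rough sketch of forwarding a read Request to a Remote I/O Target. It is a driver fragment that builds only with the WDK, and it makes several assumptions: the Request is a read, the Remote I/O Target has already been opened elsewhere, and the names MyForwardReadToRemoteTarget and MyReadCompletion are hypothetical. It uses a completion routine rather than _SEND_AND_FORGET, which sidesteps the restrictions quoted above.

```c
#include <ntddk.h>
#include <wdf.h>

EVT_WDF_REQUEST_COMPLETION_ROUTINE MyReadCompletion;   // hypothetical name

//
// Hypothetical helper: formats a read Request explicitly for the given
// Remote I/O Target and sends it asynchronously.
//
VOID
MyForwardReadToRemoteTarget(
    _In_ WDFREQUEST  Request,
    _In_ WDFIOTARGET RemoteTarget
    )
{
    NTSTATUS               status;
    WDFMEMORY              outputMemory;
    WDF_REQUEST_PARAMETERS params;
    LONGLONG               deviceOffset;

    // Pick up the offset the requestor asked to read from.
    WDF_REQUEST_PARAMETERS_INIT(&params);
    WdfRequestGetParameters(Request, &params);
    deviceOffset = params.Parameters.Read.DeviceOffset;

    status = WdfRequestRetrieveOutputMemory(Request, &outputMemory);

    if (NT_SUCCESS(status)) {
        // Format for this specific I/O Target: no UsingCurrentType shortcut.
        status = WdfIoTargetFormatRequestForRead(RemoteTarget,
                                                 Request,
                                                 outputMemory,
                                                 NULL,
                                                 &deviceOffset);
    }

    if (!NT_SUCCESS(status)) {
        WdfRequestComplete(Request, status);
        return;
    }

    WdfRequestSetCompletionRoutine(Request, MyReadCompletion, WDF_NO_CONTEXT);

    if (!WdfRequestSend(Request, RemoteTarget, WDF_NO_SEND_OPTIONS)) {
        WdfRequestComplete(Request, WdfRequestGetStatus(Request));
    }
}

VOID
MyReadCompletion(
    _In_ WDFREQUEST                     Request,
    _In_ WDFIOTARGET                    Target,
    _In_ PWDF_REQUEST_COMPLETION_PARAMS Params,
    _In_ WDFCONTEXT                     Context
    )
{
    UNREFERENCED_PARAMETER(Target);
    UNREFERENCED_PARAMETER(Context);

    // Pass the target's completion status and byte count back up.
    WdfRequestCompleteWithInformation(Request,
                                      Params->IoStatus.Status,
                                      Params->IoStatus.Information);
}
```

Keep in mind that the completion routine, like the EvtIoXxx callbacks, can run at any IRQL up to and including IRQL DISPATCH_LEVEL, so it should do nothing that requires IRQL PASSIVE_LEVEL.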

Catching the Problems Before They Ship

The problem with the errors I’ve described is that, as we discussed, no amount of testing will typically reveal them. However, in some cases, there are methods (short of a code review by an experienced Windows driver developer) that might help you to find them.

Your best chance to find these errors is to follow best practices for driver validation. For static validation, these practices are:

- Always run Code Analysis on your driver code. Set Code Analysis to run on your driver at the “Microsoft All Rules” level every time you do a build, in both Debug and Release configurations.

- Run Static Driver Verifier (SDV) with the “All” Rule Set enabled, as soon as your driver is reasonably working, and frequently during your development process. Yes, SDV did at one point suck. Yes, a few years back, it was a time wasting exercise. However, SDV has been worth its weight in gold for at least the past few years.

If you are not using both of these static analysis tools in detail, not only are you not following best practices, you’re not following the minimum standard for doing acceptable kernel-mode engineering. And when you do run these tools, take the time to carefully review their results. Neither of these tools is like Lint. Their goal is not to throw-up 65 million false positives so that you have to wade-through a ton of crap. If you take the time to really examine the results from these tools, you’ll find that more often than not they’re smarter than you are. We have a famous case here at OSR where one of our devs spent 40 minutes trying to find the bug that SDV was complaining about. And when he did find it, it was a real, actual, bug – and damn subtle as well.

In terms of dynamic testing, you absolutely must test your code with WDF Verifier enabled. Just turn WDF Verifier on for your driver on your target test machine, and leave it on during your development process. Enable Verbose tracing, and trace at the “Warnings and Errors” level. Here at OSR, we set the WDF Verifier options in the driver’s INF file, so that whenever our test team is exercising our driver code, WDF Verifier is also running.

Together, these tools won’t necessarily catch all the architectural errors I’ve described in this article. But the tools are constantly improving. Your best chance of identifying problems that you’re not aware of is with these tools. And if the tools report some weird thing that you don’t understand, post a question to NTDEV, and the good folks there will try to help you out.

Conclusion

The nature of kernel-mode development is that it is complex and that it assumes a lot of prerequisite knowledge. This makes it hard when you don’t know what you don’t know. The best way to counter these problems is to do whatever you can to make yourself knowledgeable about Windows driver development (may I recommend you take a class?). Read the WDK docs carefully. Do your research. Use all the tools that are available to you.

And, most of all, never assume that just because your driver works when you test it – even if you think you test it “really, really, well” – that your driver is correct. Because, well… maybe it’s not.